The Classification of Humankind, and the Birth of Population Science

It was Thomas Malthus, the British cleric and political economist (often railed against, rarely actually read), who cast a long shadow over thinking about our future. Writing around the turn of the 1800s, Malthus starkly suggested that “the power of population is so superior to the power in the earth to produce subsistence for man that premature death must in some shape or other visit the human race.”

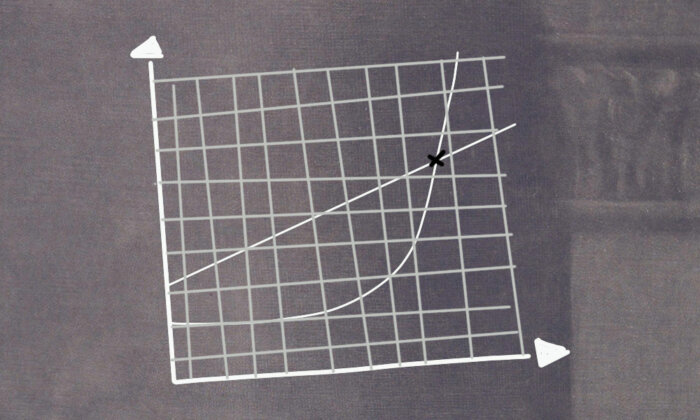

He was talking not about extinction but about the natural paring back of populations. Indeed, Malthus remained unconcerned about outright extermination because he was so convinced of the natural tendency of populations to expand explosively, leading to poverty and starvation. A population tends to overshoot its means of subsistence, he noted, precisely because it grows in a nonlinear or exponential fashion, while the availability of sustenance, he thought, grows only in a linear or arithmetic fashion.
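To see why the two growth laws must eventually collide, here is a minimal sketch comparing a population multiplied by a constant ratio each period with a food supply increased by a constant amount each period. The starting values, the doubling ratio, and the function names (`growth_paths`, `first_overshoot`) are illustrative assumptions, not figures taken from the text.

```python
from typing import List, Optional, Tuple


def growth_paths(periods: int, pop0: float = 1.0, ratio: float = 2.0,
                 food0: float = 1.0, increment: float = 1.0) -> Tuple[List[float], List[float]]:
    """Return (population, food) for periods 0..periods.

    Population grows geometrically: multiplied by `ratio` each period.
    Food grows arithmetically: increased by `increment` each period.
    """
    population = [pop0 * ratio ** t for t in range(periods + 1)]
    food = [food0 + increment * t for t in range(periods + 1)]
    return population, food


def first_overshoot(population: List[float], food: List[float]) -> Optional[int]:
    """Index of the first period in which population exceeds food, or None."""
    for t, (p, f) in enumerate(zip(population, food)):
        if p > f:
            return t
    return None


if __name__ == "__main__":
    pop, food = growth_paths(8)
    print("population:", pop)
    print("food:      ", food)
    print("first overshoot at period", first_overshoot(pop, food))
```

With a doubling population and food growing by one unit per period, population outruns subsistence at period 2. Giving the food supply a large head start (say 1,000 units growing by 100 per period) only delays the crossover to period 12, because repeated multiplication eventually overtakes any constant increment.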

While others made similar claims before him — Machiavelli, for example, asserted that population will expand until “the world will purge itself” through means of plagues or famines — Malthus’s pessimistic provocation provided fertile and innovative ground for later engineers, futurists, and optimists alike.

Ironically, Malthus himself foreshadowed later strands of anti-Malthusian thinking when he remarked, in 1798, that the “germs of existence contained in this spot of earth, with ample food and ample room to expand in, would fill millions of worlds in the course of a few thousand years.” He intended this as an impossible supposition, but many futurists since have proposed interplanetary diaspora as a genuine solution to the limits to growth.

Indeed, ‘Malthusian principles’ provided the backdrop for Freeman Dyson’s 1960 proposal of energy-harvesting spheres built around the sun: Any maturing technological civilization, Dyson supposed, would eventually bump up against the carrying capacity, or material limits, of its home planet, spurring it to enlarge its resource reservoir by damming up the otherwise wasted output of its host star.
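A back-of-the-envelope sketch of how much output Dyson had in mind as “otherwise wasted”: the fraction of the Sun’s light that actually falls on Earth. The constants are rounded standard values supplied here as assumptions (nominal solar luminosity, mean Earth radius, one astronomical unit), the function name is hypothetical, and the geometry ignores reflection and atmospheric absorption.

```python
import math

# Rounded reference values (assumptions for this sketch, not from the text):
SOLAR_LUMINOSITY_W = 3.828e26   # nominal total power output of the Sun, watts
EARTH_RADIUS_M = 6.371e6        # mean radius of the Earth, metres
EARTH_ORBIT_M = 1.496e11        # mean Earth-Sun distance (1 au), metres


def intercepted_fraction(planet_radius_m: float, orbit_radius_m: float) -> float:
    """Fraction of a star's output caught by a planet's cross-sectional disc.

    The star's light spreads over a sphere of area 4*pi*d**2 at distance d;
    the planet intercepts only its own disc, of area pi*r**2.
    """
    return (math.pi * planet_radius_m ** 2) / (4.0 * math.pi * orbit_radius_m ** 2)


if __name__ == "__main__":
    fraction = intercepted_fraction(EARTH_RADIUS_M, EARTH_ORBIT_M)
    print(f"fraction intercepted by Earth: {fraction:.2e}")
    print(f"power intercepted: {fraction * SOLAR_LUMINOSITY_W:.2e} W")
```

On these rounded values Earth catches only about 4.5 parts in ten billion of the Sun’s output (roughly 1.7 × 10^17 W); a structure enclosing the star could, in principle, intercept nearly all of the rest.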


Population expansion can be compared to a comet’s path, in that both trace curves whose rates of change themselves change. The ‘geometrical growth’ at the root of Malthus’s doomy prognosis was premised on the mathematical principle that such functions can be extrapolated far into the future. This is what has allowed scientists, since the 1600s, to predict birthrates as well as the return of comets. Indeed, Malthus had been preceded by the great mathematician Leonhard Euler, who in 1748 used exponentials to model population dynamics. But in fact the very idea of population had itself emerged as a side effect of advances in probability theory in the closing decades of the 1600s and the opening of the 1700s.
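The extrapolation at stake can be written compactly. As a sketch in modern notation (not Euler’s or Malthus’s own), a population $P_0$ growing at a constant rate $r$ per period follows the first expression below, and its doubling time $T_2$ follows from setting $P(t) = 2P_0$:

$$
P(t) = P_0\,(1 + r)^{t}, \qquad
T_2 = \frac{\ln 2}{\ln(1 + r)} \approx \frac{\ln 2}{r} \quad \text{for small } r.
$$

For an illustrative rate of $r = 0.01$ per year, $T_2 = \ln 2 / \ln 1.01 \approx 69.7$ years, and nothing in the formula stops it from being pushed centuries ahead, which is what makes such extrapolations both powerful and alarming.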

Discovering the Human Species

Under the name of political arithmetic, demography first arose as an arena of scientific inquiry in the late 1600s, through applications of early probability theory to mortality rates. It was pursued originally by polymaths attempting to calculate annuity payments, work that brought to their notice statistical patterns and regularities in the records of births and deaths. It was as a by-product of such endeavors that ‘population’ began to be regarded as an object in its own right, with its own regularities, dispositions, and lawlike features.
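The annuity problems that first drew attention to these regularities required turning counts of survivors into prices. A minimal sketch, assuming a made-up life table and a fixed interest rate (neither is historical data, and `annuity_value` is a hypothetical name), weights each future payment by the chance of being alive to collect it and discounts it for the wait:

```python
from typing import Sequence


def annuity_value(survivors: Sequence[float], start: int, rate: float) -> float:
    """Present value of an annuity paying 1 at the end of each year the holder survives.

    survivors[k] is the (hypothetical) number still alive k years into the table,
    and `start` is the holder's current position in it. Each payment t years ahead
    is weighted by the chance of surviving that long, survivors[start + t] / survivors[start],
    and discounted by (1 + rate) ** t.
    """
    alive_now = survivors[start]
    value = 0.0
    for t in range(1, len(survivors) - start):
        p_survive = survivors[start + t] / alive_now
        value += p_survive / (1.0 + rate) ** t
    return value


# Hypothetical life table: survivors at one-year intervals from some starting age.
TABLE = [1000, 900, 800, 600, 300, 0]

if __name__ == "__main__":
    print(annuity_value(TABLE, 0, 0.0))   # undiscounted: expected number of payments
    print(annuity_value(TABLE, 0, 0.05))  # discounting at 5 percent lowers the fair price
```

Without discounting, the value is just the expected number of payments (0.9 + 0.8 + 0.6 + 0.3 = 2.6 here); the point is that a fair price only exists once mortality is treated as a statistical regularity of the population rather than a fact about one person.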

Before the appropriate mathematical tools of abstraction were in place, no one had consistently thought of humanity in this way, at the level of an aggregated population or as a global mass. Only after these first advances in statistical method did the previously invisible entity now called ‘population’ solidify as a target for objective investigation. And this meant, of course, that its dynamics could suddenly be mathematically retrodicted and predicted.

With the consolidation of human population as a scientific object came, of course, new avenues for exerting power over populations (see Michel Foucault’s “Security, Territory, Population”). But what also came into focus was a new unit of potential perishing: not just individuals could die, but entire populations. Ironically, the computations of risk that had first made population visible to us simultaneously forced us to acknowledge that it was itself subject to risk.

Tellingly, one of the very first texts to engage with demographic ideas, written by the political thinker Baron de Montesquieu in 1721, was also one of the first to mention human extinction as a plausible natural event. Montesquieu, however, feared extinction chiefly by way of depopulation. In his “Lettres persanes,” he declares that global population has diminished since Antiquity, writing that, “[a]fter doing calculations as exact as possible,” he has ascertained that our “population continues to diminish daily, and if this trend persists within ten centuries the earth will be nothing but an uninhabited desert.”

Aside from the realization that ‘calculations’ can be applied to predict the long-term future of the human population, the rise of demography proved crucial to the discovery of human extinction because it began cementing humanity’s awareness of itself as a biophysical species, something consecrated in Carl Linnaeus’s inclusion, from 1735 onward, of the genus Homo in his taxonomic system. Indeed, it was during this century that the phrase ‘the human species’ entered regular parlance. And with this taxonomic self-awareness came awareness of the possibility of humans dying out as a species.


Numerous other treatises on demography across the ensuing century echoed Montesquieu’s concerns. In 1754, David Hume responded directly to Montesquieu in an essay on ‘populousness’ in which he pronounces that Homo sapiens, like all other species, will eventually undergo extinction. Decades later, William Godwin, whose bullish optimism about humanity’s future abundance had provoked Malthus’s original warnings, wrote that the burgeoning field of population science had provided a rich arena for prognostications about “the extinction of our species.”

Malthus’s predictions have not fared well. Malthusian concerns resurfaced in the 1950s and 1960s, but around that time the growth rate of the global population began to decline. Indeed, due to education, birth control, and rising prosperity, there has been what some experts describe as a ‘jaw-dropping’ decline in birth rates across the world. Some even argue that falling fertility could lead to human extinction.

Whether the concern is overpopulation or infertility, population science was from the start intertwined with probabilistic thinking about humanity’s fate, and the same thinking was soon turned toward the skies. In 1750, one French mathematician remarked that a comet hitting the Earth would shatter it “from top to bottom.” Soon after, his compatriot Jérôme Lalande calculated that, even if a comet’s orbit intersects Earth’s, there is still only a 1 in 76,000 chance of the two bodies actually colliding. By 1810, probability theory was properly brought to bear on the issue, when the German astronomer Wilhelm Olbers calculated that a comet hits Earth roughly once every 220 million years.
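Olbers’ figure is an average interval, not a forecast. One way to turn it into odds for a given span of time is sketched below, assuming that impacts arrive independently at a constant average rate (a Poisson process). That modeling assumption is ours, not something stated in the text, and the function name is hypothetical.

```python
import math


def prob_at_least_one(rate_per_year: float, years: float) -> float:
    """P(at least one impact within `years`) if impacts form a Poisson process."""
    # 1 - exp(-x), computed with expm1 to stay accurate when x is tiny
    return -math.expm1(-rate_per_year * years)


OLBERS_INTERVAL_YEARS = 220e6          # mean interval between impacts, as quoted in the text
RATE = 1.0 / OLBERS_INTERVAL_YEARS     # expected impacts per year

if __name__ == "__main__":
    for horizon in (100, 10_000, 1_000_000, OLBERS_INTERVAL_YEARS):
        print(f"{horizon:>14,.0f} years: {prob_at_least_one(RATE, horizon):.3g}")
```

Over a century the chance comes out below one in a million; only over spans comparable to Olbers’ interval itself does it approach 1 - 1/e, about 63 percent.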

Though the odds were low, the possibility remained. The local universe was now a sea of roaming risks that must perpetually be navigated, probabilistically and predictively. And, as it became more obvious that every moment is potentially a dice roll for our species (where to ‘win’ is merely to earn another chance to roll the dice), it also became clear that ignorance itself is a risk, and one that can likewise be measured with numbers.
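The dice-roll image can be made concrete. Suppose each period carries a small, fixed, independent chance of catastrophe; the value of `p_loss` below is a hypothetical choice for illustration, since the text gives no such figure. Survival then requires winning every roll, so the odds of lasting shrink geometrically even when each roll looks safe:

```python
def survival_probability(p_loss: float, rounds: int) -> float:
    """Chance of winning every one of `rounds` independent rolls,
    each of which is lost with probability p_loss."""
    return (1.0 - p_loss) ** rounds


def expected_wins_before_loss(p_loss: float) -> float:
    """Mean number of rolls won before the first loss (geometric distribution)."""
    return (1.0 - p_loss) / p_loss


if __name__ == "__main__":
    p = 0.001  # hypothetical per-period risk, chosen only for illustration
    for rounds in (10, 100, 1000, 10000):
        print(rounds, round(survival_probability(p, rounds), 4))
    print("expected wins before loss:", expected_wins_before_loss(p))
```

Even a one-in-a-thousand risk per period leaves only about a 37 percent chance of surviving a thousand periods, which is why low odds on any single roll do not add up to safety.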

Subjective Probability

Aside from assessing the objective probability of planetary catastrophes based on the known number of comets in our vicinity, another interpretation of probability had long been quietly growing in the shadow of more obvious alternatives: As opposed to measuring the observed frequencies of events, this alternative conception centered on the epistemic position of the observer herself. On this view, probability measures not the likelihood of things happening in the world but our confidence in our beliefs about those things happening.


Dubbed subjective probability by later theorists, this approach interprets probability not as an objective frequency but as a degree of credence in a belief. So the objective probability that I lied is fixed at either 0 or 1, depending on whether or not I actually told a lie, but your subjective probability on the matter will vary as you weigh up the evidence for and against.

To further illustrate this, think of an urn containing 70 blue balls and 30 red balls. The objective probability of picking a red ball from the urn is 30 percent. However, prior to any trials, I can only have a subjective probability: If I am told only that the urn contains some red and some blue balls, it may be wise to guess that the probability of drawing either color is 50 percent, but, as I pick out more balls, I can update this figure and, after enough trials, estimate the objective probability closely from past experience.
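The updating described above can be sketched with a standard Bayesian model. This formalization is our choice, not the text’s: treat the unknown chance of red as uncertain, start from a uniform Beta(1, 1) prior (so the initial estimate is exactly 50 percent), and revise after each draw, returning each ball to the urn. The function names and the random seed are illustrative.

```python
import random
from typing import List


def posterior_mean_red(reds: int, draws: int,
                       prior_red: float = 1.0, prior_blue: float = 1.0) -> float:
    """Posterior mean chance of red under a Beta(prior_red, prior_blue) prior.

    With the default uniform Beta(1, 1) prior, the estimate before any draw
    is 0.5, matching the 50 percent starting guess.
    """
    return (reds + prior_red) / (draws + prior_red + prior_blue)


def simulate_updates(n_draws: int, seed: int = 0) -> List[float]:
    """Draw with replacement from a 70 blue / 30 red urn, updating after each draw."""
    rng = random.Random(seed)
    urn = ["blue"] * 70 + ["red"] * 30
    reds = 0
    estimates = [posterior_mean_red(0, 0)]
    for draw in range(1, n_draws + 1):
        if rng.choice(urn) == "red":
            reds += 1
        estimates.append(posterior_mean_red(reds, draw))
    return estimates


if __name__ == "__main__":
    estimates = simulate_updates(5000)
    for n in (0, 10, 100, 1000, 5000):
        print(n, round(estimates[n], 3))
```

The posterior mean (reds + 1) / (draws + 2) is Laplace’s rule of succession; as draws accumulate, the subjective estimate settles near the urn’s objective 30 percent.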

Now think of a much bigger urn that contains an unspecified number of white balls and an unspecified number of black balls. Taking out a black ball causes not only my death, but the extinction of the whole human species and all records of it. Clearly, no one has ever picked out a black ball. It is not an occurrence that has ever entered the ‘experience of the ages’; but this does not mean it isn’t there in the urn. Therefore we can only use subjective probabilities to reason about it. This example illustrates why the development of ways of thinking about subjective probability has proven important for the study of existential risk.

For example, the observation that the universe is mostly silent and sterile suggests that something stops inorganic matter from evolving into spacefaring civilizations. Something obstructs progress. But when does this take place? Is it behind us or waiting in our future? Perhaps it is behind us, and the emergence of life is massively improbable. If so, discovering even basic life elsewhere would be bad news: it would suggest that life’s emergence is not so improbable after all, and so revise upward our subjective belief that the major challenge lies ahead.
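The ‘bad news’ logic is a Bayesian update. The toy sketch below assumes just two exclusive hypotheses (the main obstacle lies ahead of us, or it lies behind us at life’s emergence) and uses made-up likelihoods. It shows how finding basic life elsewhere shifts credence toward the obstacle being ahead. All constants and names are hypothetical.

```python
def bayes_posterior(prior_h: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """P(H | E) for a hypothesis H and its complement, by Bayes' theorem."""
    weight_h = prior_h * p_e_given_h
    weight_not_h = (1.0 - prior_h) * p_e_given_not_h
    return weight_h / (weight_h + weight_not_h)


# Hypothetical numbers, chosen only to show the direction of the update.
PRIOR_FILTER_AHEAD = 0.5   # initial credence that the main obstacle lies ahead
P_LIFE_IF_AHEAD = 0.3      # chance of finding basic life nearby if life arises easily
P_LIFE_IF_BEHIND = 0.01    # chance of finding it if life's emergence is the hard step

if __name__ == "__main__":
    posterior = bayes_posterior(PRIOR_FILTER_AHEAD, P_LIFE_IF_AHEAD, P_LIFE_IF_BEHIND)
    print(f"credence that the obstacle is ahead: {PRIOR_FILTER_AHEAD} -> {posterior:.3f}")
```

With these inputs, credence that the obstacle lies ahead rises from 0.5 to about 0.968. The exact figures mean nothing. What matters is the direction of the shift, which holds whenever finding life is likelier under the ‘ahead’ hypothesis than under the ‘behind’ one.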

The first person to create a mathematical rule for this kind of reasoning was Reverend Thomas Bayes in the 1750s. Writing of our need for a rule of assigning probabilities where we “absolutely know nothing antecedent to any trials,” he created a theorem that instructs how to apportion subjective likelihoods and then update them when we gain new evidence. Bayes was at the time responding to a highly abstruse and academic problem among probability theorists. He was not thinking about existential peril. But with his method for assigning subjective probability, or degrees of belief, Bayes bequeathed to later ages a theorem that could be used to reason usefully upon those ‘unknown unknowns’ or ‘wildcard risks.’
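In modern notation, which is not the form in which Bayes himself expressed it, the rule for updating a degree of belief in a hypothesis $H$ on evidence $E$ reads as follows. The second line gives the equivalent odds form, in which prior odds are multiplied by the likelihood ratio:

$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}, \qquad
P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H),
$$

$$
\frac{P(H \mid E)}{P(\neg H \mid E)} = \frac{P(E \mid H)}{P(E \mid \neg H)} \cdot \frac{P(H)}{P(\neg H)}.
$$

The prior $P(H)$ is where the problem of knowing “nothing antecedent to any trials” bites: the rule says how to revise a starting credence, but not by itself which starting credence to choose.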

Modernity enabled us not only to translate nature into numbers but also to bring the rigor of mathematics to our own opinions. This bequeathed numerous pathbreaking abilities: to compute a comet’s path, to comprehend humanity as a global mass, and to track the uncertainty of our own beliefs. From the 1600s onward, this has allowed a long future prospect to slowly come into view, by giving us the tools with which to map out its perils and its promises.

Across history, acknowledging what’s at stake in the future has caused us to pay more attention to it. Unsurprisingly, it was predictions about population that inspired recent concern for existential risk. Since the 1980s, philosophers like Derek Parfit have pointed out that human extinction would be uniquely bad for reasons of wasted opportunity as well as loss of life, because extinction would mean the loss of all potential future generations in addition to the perishing of however many billions of individuals are presently alive.

But how many future generations could there be? Remember that Malthus himself acknowledged that it is within the power of population to “fill millions of worlds.” Many experts today regard this (and much more) as achievable. This means there could be unimaginably large numbers of future people, leading highly valuable lives. And this gives us some sense of just what’s at stake in our survival.

Thomas Moynihan is a UK-based writer and a visiting research fellow at Cambridge University’s Centre for the Study of Existential Risk. He studies the history of ideas, particularly how changing conceptions of the cosmos have altered human self-conceptions and practical priorities through the past, as people have progressively gained their bearings within wider ranges of space and time. His most recent book is “X-Risk: How Humanity Discovered Its Own Extinction,” from which this article is adapted.