A History of Cryptography From the Spartans to the FBI

When Operation Trojan Shield concluded on June 8, 2021, the results were staggering: Over 800 arrests were made across 16 countries, and nearly 40 tons of drugs were seized, along with 250 guns, 55 luxury cars, and more than $48 million in currencies and cryptocurrencies.

At the core of the sting — one of the largest of its kind — was a proprietary messaging app called ANOM. The app, marketed as a secure, encryption-based communications platform, offered features beyond those of ordinary devices, such as the ability to remotely wipe all messages and data from a captured phone, effectively destroying all incriminating evidence.

The problem for users was that ANOM was run by the FBI. Its privacy protection mechanisms were a façade: All communications were copied and relayed to participating government agencies. According to Europol, the EU agency for law enforcement, 27 million messages were collected from more than 100 countries.

This illusion of secrecy and privacy in communications reflects the deeper role of cryptography in our modern digital world. The operation highlights both the power and vulnerabilities of encryption, which has been central to secure communications for centuries. Yes, centuries. Cryptography, the art of encoding and decoding secrets, dates back to ancient Greece.

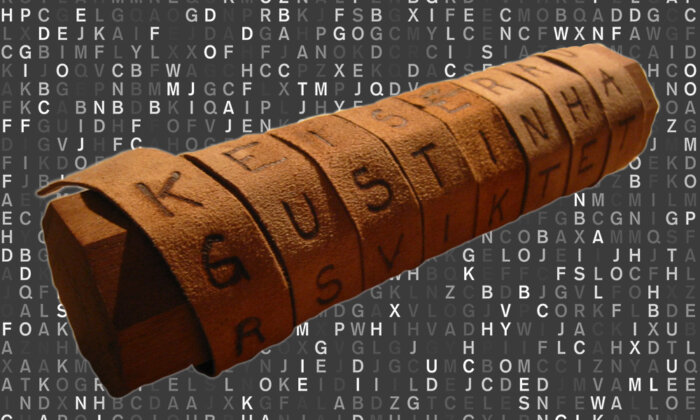

The term itself comes from the Greek word for “hidden writing,” and its origins trace back to the Spartans. According to Plutarch, they used a tool called a “scytale” or “skytale” (from the Greek σκυτάλη, meaning baton or cylinder) to hide their messages. The tool consisted of a rod around which a strip of leather or parchment was wound. The message was inscribed on the strip when wound on the scytale, and then sent without the rod. To read the message, the recipient would need a scytale of the same shape; otherwise the letters read gibberish.

The Romans, too, utilized cryptography. About Julius Caesar, the first-century historian Suetonius wrote:

If he had anything confidential to say, he wrote it in cipher, that is, by so changing the order of the letters of the alphabet, that not a word could be made out. If anyone wishes to decipher these, and get at their meaning, he must substitute the fourth letter of the alphabet, namely D, for A, and so with the others.

Suetonius was describing the Caesar cipher, a type of substitution cipher, meaning that it works by substituting letters of the alphabet with other letters of the alphabet. To read a message encrypted with the Caesar cipher you need to know the key, that is, the number of positions each letter has been shifted (in the above case, three: D, the fourth letter, stands in for A, the first). If you do not know the key, you can try different keys until you come up with the right one. At most, you would need to try 25 different shifts in English.
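To make the mechanics concrete, here is a minimal Python sketch of the Caesar cipher and of the try-every-key attack on it. The function name `caesar_shift` and the sample message are illustrative assumptions; the shift of three matches Suetonius's example, in which D replaces A.

```python
import string

ALPHABET = string.ascii_uppercase

def caesar_shift(text: str, shift: int) -> str:
    """Shift each letter A-Z by `shift` positions, wrapping around the alphabet.
    Characters that are not letters (spaces, punctuation) are left unchanged."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)
    return "".join(result)

ciphertext = caesar_shift("ATTACK AT DAWN", 3)
print(ciphertext)  # DWWDFN DW GDZQ

# Without the key, try all 25 possible shifts and look for readable text.
for key in range(1, 26):
    print(key, caesar_shift(ciphertext, -key))
```

Only one of the 25 candidate lines reads as English, which is why the cipher offers so little protection.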

A better option might be to shuffle the letters of the alphabet and decide to substitute, say, D for A, C for B, and so on. To decrypt such a message, we would just need the mapping table that records which letter stands for which.

One might attempt to try all possible mapping tables, but it would quickly prove futile. There are over 400 septillion different mapping tables — a number with 27 decimal digits!
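The count is simply the number of ways to order 26 letters, that is, 26 factorial. The sketch below checks the figure and builds one shuffled mapping table. The helper names `make_table` and `substitute`, the seed, and the sample message are assumptions made for illustration.

```python
import math
import random
import string

ALPHABET = string.ascii_uppercase

# Every ordering of the 26 letters is a possible mapping table.
print(math.factorial(26))  # 403291461126605635584000000

def make_table(seed: int) -> dict:
    """Build one shuffled mapping table. The seed only makes the example
    repeatable; `random` is not suitable for real cryptographic use."""
    shuffled = list(ALPHABET)
    random.Random(seed).shuffle(shuffled)
    return dict(zip(ALPHABET, shuffled))

def substitute(text: str, table: dict) -> str:
    """Replace each letter using the table; leave other characters alone."""
    return "".join(table.get(ch, ch) for ch in text.upper())

table = make_table(seed=2021)
inverse = {cipher: plain for plain, cipher in table.items()}

secret = substitute("MEET ME AT NOON", table)
print(secret)
print(substitute(secret, inverse))  # MEET ME AT NOON
```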

When we try to break an encryption method or read an encrypted message by trying all different possibilities, we are using a brute-force method. Brute force is rarely the best (or most efficient) method, however. A fundamental decryption method — and a more effective one — is called frequency analysis, first described by the ninth-century Arab scholar and scientist Abu Yūsuf Yaʻqūb ibn ‘Isḥāq aṣ-Ṣabbāḥ al-Kindī (801–873), also known as al-Kindi. All languages have regularities, and in all languages, letters appear with specific probabilities. In English, the most common letter is E; the second most common is T; the least common letter is Z. So, the most frequent symbol in an encrypted text is likely to be E. The second most frequent symbol is likely to be T. And so on. That means that with some counting and a little guesswork, we can work around brute force and decrypt the message.
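A small sketch shows the counting at work. For brevity it attacks a Caesar-shifted message, where guessing a single letter reveals the whole key; a shuffled alphabet would need more letters matched and more guesswork. The sample sentence is an assumption, deliberately rich in the letter E, since very short texts can easily mislead the count.

```python
from collections import Counter
import string

ALPHABET = string.ascii_uppercase

def shift_text(text: str, shift: int) -> str:
    """Caesar-shift the letters of `text`; other characters pass through."""
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + shift) % 26] if ch in ALPHABET else ch
        for ch in text.upper()
    )

def letter_counts(text: str) -> Counter:
    """Count how often each letter A-Z occurs in `text`."""
    return Counter(ch for ch in text.upper() if ch in ALPHABET)

plaintext = "MEET ME HERE WHEN THE BELL RINGS THREE TIMES"
ciphertext = shift_text(plaintext, 7)

# Assume the most frequent ciphertext symbol stands for E.
most_common_symbol, _ = letter_counts(ciphertext).most_common(1)[0]
guessed_key = (ALPHABET.index(most_common_symbol) - ALPHABET.index("E")) % 26

print(most_common_symbol, guessed_key)      # L 7
print(shift_text(ciphertext, -guessed_key))  # the original sentence
```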

This cat-and-mouse game has defined cryptography for centuries. A cryptographic method is invented and considered secure, only for an attack to later break it. After some time, a better method is invented that is considered invulnerable to known attacks. And the cycle continues.

This evolution in cryptographic techniques has driven the development of increasingly sophisticated methods. One such advancement is the move from monoalphabetic ciphers, like the substitution cipher described above, to polyalphabetic ciphers, which use multiple substitution rules to strengthen encryption.

To encrypt a message using a polyalphabetic cipher, we use not one but several mapping tables. We encrypt the first letter using the first mapping table, the second using the second mapping table, and so on, until the last mapping table; then we cycle through the tables again. If the mapping changes, then the same letter will not always be encrypted in the same way: At some point in the message, A may be mapped to K, at another part to X, for example. That means that straightforward frequency analysis will not work.
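As a rough sketch of the idea, the code below uses the simplest possible set of mapping tables: each table is just a shifted alphabet, and a keyword decides which shift applies to each letter in turn. The function name `polyalphabetic`, the keyword, and the message are assumptions chosen for illustration.

```python
import string

ALPHABET = string.ascii_uppercase

def polyalphabetic(text: str, keyword: str, decrypt: bool = False) -> str:
    """Encrypt (or decrypt) with one shifted alphabet per keyword letter,
    cycling through the keyword. Non-letters are copied unchanged and do
    not advance the keyword position."""
    sign = -1 if decrypt else 1
    keyword = keyword.upper()
    result = []
    position = 0  # which mapping table we are up to
    for ch in text.upper():
        if ch in ALPHABET:
            shift = ALPHABET.index(keyword[position % len(keyword)])
            result.append(ALPHABET[(ALPHABET.index(ch) + sign * shift) % 26])
            position += 1
        else:
            result.append(ch)
    return "".join(result)

ciphertext = polyalphabetic("ATTACKATDAWN", "LEMON")
print(ciphertext)  # LXFOPVEFRNHR
print(polyalphabetic(ciphertext, "LEMON", decrypt=True))  # ATTACKATDAWN
```

In the output, the four As of the message become L, O, E, and N, so counting symbols no longer points straight at a single plaintext letter.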

Indeed, an evolved polyalphabetic cipher, the Vigenère cipher, named after Blaise de Vigenère (1523–1596) but actually invented by Giovan Battista Bellaso in 1553, remained unbroken for three centuries until 1863. It became known as le chiffrage indéchiffrable (“the indecipherable cipher,” in French). The Vigenère cipher was eventually broken by the German infantry officer, cryptographer, and archaeologist Friedrich Kasiski, who realized that frequency analysis could be applied by identifying the number of different mappings used, allowing separate analysis of message segments encrypted with the same mapping.
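The consequence of Kasiski's insight can be sketched in a few lines: once the number of mappings is known, taking every n-th letter of the ciphertext yields segments that were each encrypted with a single shift, so each can be attacked like a Caesar cipher. The sketch assumes the key length is already known and does not show how Kasiski estimated it; the keyword, message, and function names are illustrative assumptions.

```python
import string

ALPHABET = string.ascii_uppercase

def shift_letters(text: str, shift: int) -> str:
    """Caesar-shift a string made only of letters A-Z."""
    return "".join(ALPHABET[(ALPHABET.index(c) + shift) % 26] for c in text)

def vigenere_encrypt(plaintext: str, keyword: str) -> str:
    """Shift the i-th letter by the keyword letter at position i mod len(keyword)."""
    return "".join(
        shift_letters(c, ALPHABET.index(keyword[i % len(keyword)]))
        for i, c in enumerate(plaintext)
    )

plaintext = "ATTACKATDAWN"
keyword = "LEMON"
ciphertext = vigenere_encrypt(plaintext, keyword)

key_length = len(keyword)  # assumed to be known to the attacker
segments = [ciphertext[i::key_length] for i in range(key_length)]
print(segments)  # ['LVH', 'XER', 'FF', 'OR', 'PN']

# Each segment was produced by one shift only, so undoing that single
# shift recovers the matching letters of the plaintext.
for i, segment in enumerate(segments):
    shift = ALPHABET.index(keyword[i])
    print(segment, "->", shift_letters(segment, -shift))
```

With a realistically long ciphertext, the shift for each segment would be guessed by frequency analysis rather than read off the keyword as it is here.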

But the culmination of the cat-and-mouse game in classical cryptography was the Enigma machine, used by the Germans in World War II. The Enigma was a formidable polyalphabetic cipher; it took years of effort from Polish and British cryptanalysts, and the genius of computer science pioneer Alan Turing, to break it. The story has become the stuff of lore. Enigma was a watershed: The cryptanalysis was carried out by mathematicians, not by linguists or puzzle hobbyists. To break the encryption of the Enigma, Alan Turing designed another machine, called the Bombe, now painstakingly reconstructed at Bletchley Park, where it was originally built during the war. It took a machine to break a machine. And this is how it would be from that point on.

Today, a cipher that underpins much of our digital infrastructure is the Advanced Encryption Standard (AES), developed by Belgian cryptographers Joan Daemen and Vincent Rijmen and adopted by the U.S. National Institute of Standards and Technology (NIST) in 2001 after a five-year open process evaluating competing proposals.

AES works by repeatedly substituting and permuting the bytes of a message, relying on mathematical principles, in particular number theory, the branch of mathematics that deals with the study of integers. Without the correct key, decrypting an AES-encrypted message is virtually impossible. As a symmetric cipher, AES uses the same key for encryption and decryption, like the other ciphers we have discussed. However, this symmetry poses a problem: The sender and receiver must exchange a key, and they cannot encrypt the key itself, as that would require another key. So, how can they share it?

In 1976, American cryptographers Whitfield Diffie and Martin Hellman published a paper that changed cryptography forever. In that paper, they described the Diffie-Hellman key exchange, a method that enables two parties to agree on a shared key securely over a public channel, without meeting or communicating privately beforehand.

There’s a popular and helpful analogy that replaces math with colors to illustrate how the Diffie-Hellman key exchange works: Suppose Alice and Bob agree on a common base color, say yellow. Separately, each chooses a secret color and mixes it with their yellow. Alice sends her pot to Bob, and Bob sends his pot to Alice. Upon receiving the other’s mixture, each person adds their own secret color. This final mix results in the same shade for both Alice and Bob. A third party would know the starting yellow and the first mixed colors, but they wouldn’t be able to reverse-engineer the final color.

In reality, Diffie-Hellman doesn’t use colors but very large numbers. Its security depends on how hard it is to reverse the process: Just as one can’t easily separate paint colors once mixed, an eavesdropper can’t feasibly compute the shared secret key without knowing the private numbers. At least, this is what cryptographers believe. It has not been mathematically proven that it is impossible to derive the key (like de-mixing the colors). The best known way to do so requires solving the so-called discrete logarithm problem. Currently, no efficient method is known for solving this problem, and it is widely believed that none will be discovered. That is typical of the situation in modern cryptography: We are confident that a cipher is secure because breaking it boils down to solving a mathematical problem for which, despite our best efforts, no efficient solution is currently known.
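The exchange can be sketched with deliberately tiny numbers. The prime 23, the base 5, and the two secret values below are toy assumptions chosen so the arithmetic can be checked by hand; real deployments use numbers hundreds of digits long. The public prime and base play the role of the shared yellow, and the secret exponents are the secret colors.

```python
# Public parameters, known to everyone including an eavesdropper.
p = 23  # a small prime modulus (toy value)
g = 5   # the public base

# Private numbers, never transmitted.
alice_secret = 6
bob_secret = 15

# Each party "mixes" the base with their secret and sends the result.
alice_public = pow(g, alice_secret, p)  # 8
bob_public = pow(g, bob_secret, p)      # 19

# Each party mixes the received value with their own secret.
alice_key = pow(bob_public, alice_secret, p)
bob_key = pow(alice_public, bob_secret, p)
print(alice_key, bob_key)  # 2 2

# An eavesdropper sees p, g, 8 and 19. Recovering a secret means solving
# g**x % p == alice_public, the discrete logarithm problem. Trying every x
# works here only because p is tiny.
recovered = next(x for x in range(1, p) if pow(g, x, p) == alice_public)
print(recovered)  # 6
```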

Another breakthrough came in 1978 when three researchers, Ron Rivest, Adi Shamir, and Leonard Adleman, created what they called the RSA cryptosystem (combining the first initials of all their last names), ushering in public key cryptography. In a public-key cryptosystem, there is one public key and one corresponding private key. We can use the public key to encrypt a message, which then can only be decrypted by the private key. As the public key is not secret, if Alice wants to send a secret message to Bob, she gets his public key over any convenient, public channel and then encrypts the message. The security of RSA encryption relies on the difficulty of decomposing an integer into a product of smaller, indivisible parts, specifically prime numbers. For example, 60 = 2 × 2 × 3 × 5. This process, known as integer factorization, has no known fast method for big numbers, and in cryptography we use really big numbers.
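A toy example helps show how the pieces fit. The primes 61 and 53, the public exponent 17, and the message 65 are assumptions picked to keep the numbers small; the sketch also omits the padding that real RSA encryption requires. The `prime_factors` helper is a plain trial-division routine written for this illustration.

```python
def prime_factors(n: int) -> list:
    """Factor n by trial division: fine for small n, hopeless for the
    sizes used in practice."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)
    return factors

print(prime_factors(60))  # [2, 2, 3, 5]

# Toy key generation from two tiny primes.
p, q = 61, 53
n = p * q                # 3233, published as part of the public key
phi = (p - 1) * (q - 1)  # 3120, kept secret
e = 17                   # public exponent
d = pow(e, -1, phi)      # private exponent, 2753 (needs Python 3.8+)

message = 65
ciphertext = pow(message, e, n)    # anyone can do this with (n, e)
recovered = pow(ciphertext, d, n)  # only the holder of d can undo it
print(recovered)  # 65

# Whoever can factor n can recompute phi and d. Here that takes no time.
print(prime_factors(n))  # [53, 61]
```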

Even though public key cryptography opened up new horizons in the field, symmetric cryptography is far from obsolete, as it is significantly faster. In practice, public key cryptography is often used for key exchange — such as in the Diffie-Hellman protocol — allowing two parties to securely establish a shared secret key, which they then use for efficient symmetric encryption.

Apart from encrypting messages, public key cryptography allows us to create digital signatures. If Alice encrypts a message with her private key, then anyone holding her public key can decrypt it, and only messages produced with her private key will decrypt correctly. This ensures authenticity, as only Alice could have encrypted the message using her private key.
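The same toy numbers illustrate signing. In this sketch the number 65 stands in for a digest of Alice's message; real signature schemes first hash the message and apply padding, which is omitted here. The key values and the `sign` and `verify` helper names are assumptions for illustration.

```python
# Alice's toy RSA key pair (tiny primes, for illustration only).
p, q = 61, 53
n = p * q                           # public modulus
e = 17                              # public exponent
d = pow(e, -1, (p - 1) * (q - 1))   # private exponent (Python 3.8+)

def sign(digest: int, private_exponent: int, modulus: int) -> int:
    """Transform the digest with the private key."""
    return pow(digest, private_exponent, modulus)

def verify(digest: int, signature: int, public_exponent: int, modulus: int) -> bool:
    """Undo the transformation with the public key and compare."""
    return pow(signature, public_exponent, modulus) == digest

digest = 65  # stand-in for a hash of the message; must be smaller than n
signature = sign(digest, d, n)

print(verify(digest, signature, e, n))  # True
print(verify(66, signature, e, n))      # False: the message was altered
```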

Key exchange and symmetric encryption happen all the time on our digital devices. When we initiate a communication session, be it visiting a website or setting up a video or audio call, our device exchanges a secret key with the other party. Once this is done, our communication is encrypted with a symmetric encryption mechanism like AES. It all happens behind the scenes, and it is so fast that we don’t notice any latency. But without it, none of our digital communications would be secure.

Of course, the cat-and-mouse game is far from over. Today, cryptography faces a looming challenge: quantum computing. Quantum computers, when they become practical, could tackle certain problems much faster than today’s machines, rendering RSA, Diffie-Hellman, and other systems insecure.

Quantum computing is not here yet; quantum computers have been built, but they have not yet reached the size and capabilities to threaten our cryptographic tools. But that does not mean that cryptographers are idle. Work has started on post-quantum cryptography, that is, cryptographic methods that, as far as we know, will be invulnerable to attacks by quantum computers. Post-quantum cryptography aims not only to safeguard our privacy in a future with quantum computers but also to protect today’s data. Imagine, for example, a scenario where someone stores our current communications, intending to decrypt them when quantum computers become available. This tactic, known as store now, decrypt later, threatens to jeopardize secrets that must remain private in both the short and long term.

In the same spirit of openness that animated the development of AES, the development of post-quantum cryptography is an open process, with different proposals being evaluated for their adoption as standards by NIST. Cryptography’s evolution, after all, is a perpetual cycle of breakthroughs and setbacks, perhaps best encapsulated by Samuel Beckett’s dictum: “Try again. Fail again. Fail better.”

Panos Louridas is Professor in the Department of Management Science and Technology, Athens University of Economics and Business. He is the author of “Real-World Algorithms,” as well as “Algorithms” and “Cryptography,” both in the MIT Press Essential Knowledge series.