The Living History and Surprising Diversity of Computer-Generated Text

In February 2019 a new system for text generation called GPT-2 was announced and the “small” version of the model was released for public use. The company behind GPT-2, OpenAI, declared the large model to be so good at weaving text together that it was too dangerous to release, given the potential for fake news generation. In November of that year, however, Sam Altman’s company decided that it would be okay to release this large model after all.

A year later, the next major version of this “Generative Pretrained Transformer” was finished and exclusively licensed to Microsoft. These systems were essentially pure text generators: Give them a prompt and they would continue to write whatever seemed, statistically, to follow. Two years after that, at the end of 2022, a system to allow human-computer exchanges, ChatGPT, was made available as a Web service. It was based on an enhanced model with additional features, such as answering questions. The rest, thanks to savvy marketing and a flood of news, is history.

That’s hardly the history of computer text generation, however. The large language model, the basis for all the GPT systems, is an innovative recent development, but when it comes to generating text, it’s less than the tip of more than an iceberg. Work in the computer generation of text now spans seven decades and involves a tremendous variety of technical approaches — from both main branches of AI research, and in “scrappy” systems that combine both, and via numerous smaller-scale programs, poems, and artworks done outside of academic or industrial contexts. Some of it allows us to trace the path that researchers and early author-programmers took in paving the way for later work. But much of it still resonates today.

We often think of computers as numerical devices. After all, the early computer ENIAC was given the job, initially, of calculating artillery firing tables. The word “computer” only reinforces this role; some early ones were even called “calculators.” But a computer can be an “ordinateur” in France and “ordenador” in Spain, highlighting this machine’s ability to order or sort things rather than to calculate. Early on, computers were sometimes called “brains,” as in Edmund Berkeley’s influential 1949 book, “Giant Brains, or Machines That Think,” which framed them in cognitive terms. Terminology carries its own metaphors, each offering an alternative way to think about the same object. Just the name “MacBook” or “ThinkPad,” for instance, suggests that they are tools for working with text. So an important part of the history of computing is found in how computers manipulate language, including how they generate text.

To gain insight into this important dimension of computing, here are three very early examples of text generation that are particularly striking: two arising from academic research, one by a well-known Fluxus artist. The outputs presented here may seem primitive, but they suggested new ways that computers could work on language, and each of them had a use, whether that was better understanding minority languages, determining how seemingly simple writing is rich with grammatical potential, or demonstrating how poetry can be extended into a process without end.

Fairy Tale Generator

Joseph E. Grimes, 1963

The linguist Joseph E. Grimes, then working at the Universidad Nacional Autónoma de México, developed a system based on Vladimir Propp’s structuralist ideas. As part of his research, he told generated stories to speakers of indigenous languages. This is the only known output.

//

A lion has been in trouble for a long time. A dog steals something that belongs to the lion. The hero, Lion, kills the villain, Dog, without a fight. The hero, Lion, thus is able to get his possession back. [1]

Random Generation of English Sentences

Victor H. Yngve, 1961

Victor H. Yngve was doing machine translation research and developed a pipelined model that would begin with text in the source language, move through an abstract semantic representation, and finish by generating output in the target language. In producing the output here, he considered only the last stage and simply sought to show that automated sentence generation was possible. He modeled the first 10 sentences of Lois Lenski’s children’s book “The Little Train,” which begins “Engineer Small has a little train.” His 77 rules, which could produce these sentences and similar ones, were recursive, allowing phrases to be embedded within phrases. Margaret Masterman used this as an example of how the computer could be used for artistic and creative purposes. She wrote that “whether such sentences as these are or are not nonsense” is an extremely sophisticated question, and wondered whether “When he is oiled he is polished” is nonsense.

//

He is oiled and proud.

When he keeps sound-domes, he is shiny and shiny.

When he makes four proud fire-boxes, he is proud of the boxes and Small.

When he keeps four bells and the sand-domes under steam, four trains and four shiny and big whistles, Engineer Small has a polished train and big wheels in his four heated driving wheels.

A fire-box is proud of Small.

Steam is heated.

When a heated sand-dome is heated, it keeps four engines, a polished and big fire-box, and a fire-box.

He is big, polished, heated, and big.

When water is polished, steam is heated.

A polished, shiny and shiny driving wheel makes a fire-box, four wheels, his four oiled, black and black wheels, and a polished boiler polished.

When he is oiled, he is shiny and big. [2]
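The idea of recursive rules that embed phrases within phrases can be illustrated with a small sketch. The toy grammar below is a hypothetical stand-in, not a reconstruction of Yngve's 77 rules; the rule names, the vocabulary, and the depth limit are all assumptions made for illustration.

```python
import random

# Hypothetical toy grammar (an assumption, NOT Yngve's actual rules).
# Each symbol maps to alternative expansions; the first alternative
# for every symbol is non-recursive, so expansion can always end.
GRAMMAR = {
    "S": [["NP", "VP"], ["when", "NP", "VP", ",", "NP", "VP"]],
    "NP": [["he"], ["a", "ADJP", "N"], ["NP", "and", "NP"]],
    "VP": [["is", "ADJP"], ["keeps", "NP"]],
    "ADJP": [["ADJ"], ["ADJ", "and", "ADJP"]],
    "ADJ": [["heated"], ["proud"], ["shiny"], ["polished"]],
    "N": [["train"], ["fire-box"], ["whistle"]],
}


def expand(symbol, rng, depth=0, max_depth=6):
    """Recursively rewrite a symbol into a list of words."""
    if symbol not in GRAMMAR:
        return [symbol]  # a terminal: an actual word or a comma
    options = GRAMMAR[symbol]
    # Past the depth limit, take the first (non-recursive) option.
    option = options[0] if depth >= max_depth else rng.choice(options)
    words = []
    for part in option:
        words.extend(expand(part, rng, depth + 1, max_depth))
    return words


def sentence(rng):
    """Generate one sentence, then tidy spacing and capitalization."""
    text = " ".join(expand("S", rng)).replace(" ,", ",")
    return text[0].upper() + text[1:] + "."


if __name__ == "__main__":
    rng = random.Random(1961)
    for _ in range(5):
        print(sentence(rng))
```

Because "NP" and "ADJP" can contain themselves, a handful of rules yields an unbounded set of sentences; the depth limit is only a practical guard for this sketch.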

The House of Dust

Alison Knowles, 1967

This influential program produces a limitless sequence of four-line stanzas, each describing a house. There are only four possibilities for the third line; others are considerably more varied. Alison Knowles, a poet and Fluxus artist, collaborated with composer and programmer James Tenney, who held a computer programming workshop in the New York apartment of Knowles and her husband Dick Higgins. Tenney worked with Knowles’s poem structure, her word lists, and the FORTRAN IV programming language. The overall project included a 1967 artist’s book publication of 500 unique 15-page outputs, attributed to the duo and the Siemens System 4004. Knowles also constructed a full-scale model based on “A house of plastic / in a metropolis / using natural light / inhabited by people from all walks of life.”

//

A house of sand

⠀⠀In Southern France

⠀⠀⠀⠀Using electricity

⠀⠀⠀⠀⠀⠀Inhabited by vegetarians

A house of plastic

⠀⠀In a place with both heavy rain and bright sun

⠀⠀⠀⠀Using candles

⠀⠀⠀⠀⠀⠀Inhabited by collectors of all types

A house of plastic

⠀⠀Underwater

⠀⠀⠀⠀Using natural light

⠀⠀⠀⠀⠀⠀Inhabited by friends

A house of broken dishes

⠀⠀Among small hills

⠀⠀⠀⠀Using natural light

⠀⠀⠀⠀⠀⠀Inhabited by little boys

A house of mud

⠀⠀In a hot climate

⠀⠀⠀⠀Using all available lighting

⠀⠀⠀⠀⠀⠀Inhabited by French and German speaking people

A house of mud

⠀⠀In a hot climate

⠀⠀⠀⠀Using natural light

⠀⠀⠀⠀⠀⠀Inhabited by collectors of all types [3]
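The combinatorial structure of these stanzas can be sketched in a few lines. This is a Python illustration, not the FORTRAN IV program Tenney wrote; the word lists below contain only phrases visible in the excerpt above, so they are partial, and the function name `stanza` is an assumption.

```python
import random

# Partial lists, taken only from the excerpt above (the original lists are longer).
MATERIALS = ["sand", "plastic", "broken dishes", "mud"]
LOCATIONS = [
    "in Southern France",
    "in a place with both heavy rain and bright sun",
    "underwater",
    "among small hills",
    "in a hot climate",
]
LIGHT_SOURCES = ["electricity", "candles", "natural light", "all available lighting"]
INHABITANTS = [
    "vegetarians",
    "collectors of all types",
    "friends",
    "little boys",
    "French and German speaking people",
]


def stanza(rng):
    """Build one four-line stanza by picking one item per line."""
    lines = [
        "A house of " + rng.choice(MATERIALS),
        rng.choice(LOCATIONS),
        "using " + rng.choice(LIGHT_SOURCES),
        "inhabited by " + rng.choice(INHABITANTS),
    ]
    # Indent each line a little more than the last and capitalize it.
    return "\n".join(
        "  " * i + line[0].upper() + line[1:] for i, line in enumerate(lines)
    )


if __name__ == "__main__":
    rng = random.Random(1967)
    for _ in range(3):
        print(stanza(rng))
        print()
```

Even with these shortened lists, one choice per line gives 4 × 5 × 4 × 5 = 400 distinct stanzas, which suggests how the full lists could fill hundreds of unique printouts.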

The innovative work done in computer text generation in the 1960s is not only of historical interest; it remains inspirational to many. For instance, there are recent poetry generators that use the stanza form and combinatorial technique of “The House of Dust,” including “The House of Trust” by Stephanie Strickland and Ian Hatcher (about the institution of the public library) and “A New Sermon on the Warpland” by Lillian-Yvonne Bertram, based on the writings of Gwendolyn Brooks. Both are documented in the new anthology that Bertram and I have edited, “Output: An Anthology of Computer-Generated Text, 1953–2023.”

In recent years, author-programmers using computer text generation have gone beyond the work of previous decades, and not just by applying and developing new technologies. In addition to using novel techniques, they have dedicated themselves to becoming text generation artists first and foremost. They have also formed communities of practice and, aware of each other’s work, have been building on one another’s innovations. While poets, artists, and others who engage creatively with computing have played a major role in this field, there has been a lot of other progress, for instance in reporting or textualizing data, sometimes called “robo-journalism.” What follows are three recent selections. Two reveal radical advances in poetic and fiction-writing practice. The other represents a bilingual automated journalism project, a success story from the same year as the fears about GPT-2’s potential for fake news generation. This one shows how computers have already been effectively generating real news.

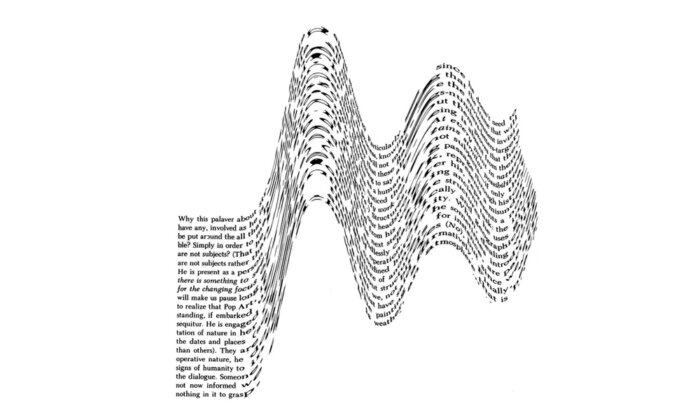

Articulations

Allison Parrish, 2018

Allison Parrish, an American poet and programmer, first developed a large corpus of verse lines from Project Gutenberg. Then, to generate the first part of this two-part book, she devised her own phonetic representation of these, mapping those lines into a high-dimensional vector space. The output text is a random walk through these lines of verse, stepping at each moment to the line that is most similar in sound, never going back to the same verse line again. The final step was assembling these lines into prose.

//

She whipped him, she lashed him, she whipped him, she slashed him, we shall meet, but we shall miss him; she loves me when she punishes.

When she blushes. See how she blushes!

Hush—I shall know I shall faint—I shall die—and I shall sing, I shall say—I shall take.

I shall it take I shall take it kindly, like a stately Ship I patient lie: the patient Night the patient head O patient hand. O patient life. Oh, patience. Patience!

Patience, patience. He said, Nay, patience. Patience, little boy. A shape of the shapeless night, the spacious round of the creation shake; the sea-shore, the station of the Grecian ships.

In the ship the men she stationed, between the shade and the shine; between the sunlight and the shade between the sunset and the night; between the sunset and the sea between the sunset and the rain; a taint in the sweet air when the setting sun the setting sun?

The setting day a snake said: it’s a cane, it’s a kill. Is like a stain. Like a stream. Like a dream.

And like a dream sits like a dream: sits like a queen, shine like a queen.

When like a flash like a shell, fled like a shadow; like a shadow still.

Lies like a shadow still, aye, like a flash o light, shall I like a fool, quoth he, You shine like a lily like a mute shall I still languish,—and still, I like Alaska. [4]
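The walk described for “Articulations” (start somewhere, step to the most similar-sounding line, never revisit a line) can be sketched as a greedy nearest-neighbor search. Parrish's phonetic representation is her own and is not reproduced here; as a loudly labeled stand-in, this sketch counts adjacent letter pairs and compares lines by cosine similarity. The function names and the sample lines (phrases lifted from the excerpt above) are assumptions for illustration.

```python
import math
import random
from collections import Counter


def sound_vector(line):
    """Stand-in feature vector: counts of adjacent letter pairs.

    This is an assumption, NOT Parrish's phonetic representation.
    """
    letters = [c for c in line.lower() if c.isalpha()]
    return Counter(a + b for a, b in zip(letters, letters[1:]))


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(
        sum(x * x for x in v.values())
    )
    return dot / norm if norm else 0.0


def walk(lines, rng, steps):
    """From a random start, repeatedly move to the most similar unvisited line."""
    vectors = [sound_vector(line) for line in lines]
    current = rng.randrange(len(lines))
    visited = [current]
    while len(visited) < min(steps, len(lines)):
        candidates = [i for i in range(len(lines)) if i not in visited]
        current = max(candidates, key=lambda i: cosine(vectors[current], vectors[i]))
        visited.append(current)
    return [lines[i] for i in visited]


# A few phrases from the excerpt above, used as a tiny corpus.
LINES = [
    "like a stream",
    "like a dream",
    "sits like a queen",
    "the patient Night",
    "O patient hand",
]

if __name__ == "__main__":
    print(" ".join(walk(LINES, random.Random(2018), len(LINES))))
```

With a real corpus of many thousands of lines, a spatial index would be a sensible replacement for the exhaustive comparison at each step.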

Arria NLG

Arria NLG plc, 2019

The BBC decided to provide coverage of the UK general election, down to individual constituencies, for the first time in December 2019. They accomplished this by computer-generating election stories using a commercial system. Although this wasn’t the first use of automated reporting by the BBC, it was the largest project of the sort. Because this was the first time detailed election coverage was offered, there was no corpus of similar news stories to train on. To ensure accuracy and adherence to BBC style, the news organization went with a rule-based approach that used templates, eschewing large language models or other machine learning approaches. This output is one of the approximately 650 articles produced in English; 40 were generated in Welsh. Reiter, whose blog post is cited here, was one of the founders of the natural language generation company Data2Text in 2009; it merged with Arria NLG in 2012 and 2013.

//

Florence Eshalomi has been elected MP for Vauxhall, meaning that the Labour Party holds the seat with a decreased majority.

The new MP beat Liberal Democrat Sarah Lewis by 19,612 votes. This was fewer than Kate Hoey’s 20,250-vote majority in the 2017 general election.

Sarah Bool of the Conservative Party came third and the Green Party’s Jacqueline Bond came fourth.

Voter turnout was down by 3.5 percentage points since the last general election.

More than 56,000 people, 63.5% of those eligible to vote, went to polling stations across the area on Thursday, in the first December general election since 1923.

Three of the six candidates, Jacqueline Bond (Green), Andrew McGuinness (The Brexit Party) and Salah Faissal (independent) lost their £500 deposits after failing to win 5% of the vote.

This story about Vauxhall was created using some automation. [5]
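A rule-based, template-driven approach of the general kind described above can be sketched briefly. This is not Arria's system or the BBC's templates; the function names, the field names, and the rules are hypothetical. The figures come from the Vauxhall story above, and the previous party is inferred from the phrase “holds the seat.”

```python
def outcome(party, previous_party, majority, previous_majority):
    """Choose the clause describing the result, using fixed rules."""
    if party != previous_party:
        return f"gains the seat from the {previous_party}"
    if majority > previous_majority:
        return "holds the seat with an increased majority"
    if majority < previous_majority:
        return "holds the seat with a decreased majority"
    return "holds the seat with an unchanged majority"


def story(r):
    """Fill two sentence templates from one constituency record."""
    clause = outcome(
        r["party"], r["previous_party"], r["majority"], r["previous_majority"]
    )
    first = (
        f"{r['winner']} has been elected MP for {r['constituency']}, "
        f"meaning that the {r['party']} {clause}."
    )
    second = f"The new MP beat {r['runner_up']} by {r['majority']:,} votes."
    return [first, second]


# Values taken from the generated story above; field names are hypothetical.
VAUXHALL = {
    "winner": "Florence Eshalomi",
    "constituency": "Vauxhall",
    "party": "Labour Party",
    "previous_party": "Labour Party",
    "majority": 19612,
    "previous_majority": 20250,
    "runner_up": "Liberal Democrat Sarah Lewis",
}

if __name__ == "__main__":
    for line in story(VAUXHALL):
        print(line)
```

Every sentence such a system can produce is written in advance by a person, which is one reason an approach like this suits a newsroom that needs accuracy and a house style.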

A Noise Such as a Man Might Make

Milton Läufer, 2018

The source texts for this novel, not spelled out until the book’s afterword, are books by two American novelists, both dealing with a struggle against the elements and changing notions of masculinity. (For the curious who aren’t willing to puzzle this out, they are Ernest Hemingway’s “The Old Man and the Sea” and Cormac McCarthy’s “The Road.”) The Markov chain method creates a text that has many disjunctions, appropriate to the confused and jarring situation, which includes environmental catastrophe and a journey toward an unknown destination. Yet the result may be more cohesive than in other cases, because the novels that are sources in this project use almost no proper names. Milton Läufer, who is from Argentina and lives in Berlin, is a writer, journalist, teacher, and programmer.

//

Everything wet. A door stood open to the sky. A single volume wedged in the rack against the forward bulkhead. He found a set of tracks cooked into the tar. A faint whooshing. He wafted away the smoke and looked down into the pool below. They could smell him in his own. Even now, he thought. There are people there. The shape of a furnace standing in the road, the boy stopped shaking and after a while the child stopped shivering and after a while the screaming stopped. He wondered if it was from smelling the gasoline. The boy was exhausted. He took hold of the line that was across his shoulders and, holding it anchored with his shoulders slumped. He was sure they were being watched but he saw it and knew soon they would all be lost.

Like the great pendulum in its rotunda scribing through the long dusk and into the woods on the far shore of a river and stopped and then went up the road and stood. Back. More. Here. Okay. He waited with the line across his shoulders and after a while he slept. The thunder trundled away to the south. A group of people. This is what the good guys. And we always will be. What’s negotiate? It means talk about it some more and come up with some other deal. There is no God. No?

There is no book and your fathers are watching? That they weigh you in their ledgerbook? Against what? There is no other deal. There is none. Will you sit in the sun. He remembered waking once on such a night to the clatter of crabs in the pan where he’d built it and he knew that he was lost. No. There’s no one here, the boy told him gently. I have to have it, I will work back to the stern and crouching and holding the big line with his right hand to his forehead, conjuring up a coolness that would not be seen. The boy lay huddled on the ground set the woods to the road and looked out to the saloon.

Lastly he made a small paper spill from one of the gray chop. Nothing moving out there. The dam used the water that was a good chance they would die in the mountains above them. He looked away. Why not? Those stories are not true. They’re always about something bad happening. You said so. Yes. The boy took the rolls of line in the basket and stood on the bridge and pushing them over the side and into the woods and sat holding him while he held his right foot on the coils to hold them as he drew his knots tight. Now he could let it run slowly through his raw hands and, when there are no hurricanes, the weather of hurricane months is the best of all the year. He hardly knew the month. He thought they would have to leave the buildings standing out of the woods, he was carrying the suitcase and he had to keep moving. They stumbled along side by side by the yellow patches of gulf-weed. [6]
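A word-level Markov chain that blends two sources can be sketched as follows. The details of Läufer's implementation (the chain order, how text was split into tokens) are not given here, so the order-2 word chain below is an assumption, and the two short source strings are invented stand-ins rather than quotations from either novel.

```python
import random
from collections import defaultdict


def build_chain(texts, order=2):
    """Map each run of `order` words to the words that follow it in any source."""
    chain = defaultdict(list)
    for text in texts:
        words = text.split()
        for i in range(len(words) - order):
            chain[tuple(words[i:i + order])].append(words[i + order])
    return chain


def generate(chain, rng, length=30):
    """Start from a random state and keep sampling a recorded next word."""
    state = rng.choice(sorted(chain))
    out = list(state)
    while len(out) < length:
        followers = chain.get(state)
        if not followers:
            break  # dead end: this state only occurs at the end of a source
        out.append(rng.choice(followers))
        state = tuple(out[-len(state):])
    return " ".join(out)


# Invented stand-in sources (assumptions), sharing words so the chain can cross over.
SOURCES = [
    "he held the line in his hands and he waited for the fish to turn",
    "he held the boy in his arms and he waited for the rain to stop",
]

if __name__ == "__main__":
    chain = build_chain(SOURCES, order=2)
    print(generate(chain, random.Random(2018), length=20))
```

Wherever the sources share a sequence of words, the chain may switch from one text to the other, which is where disjunctions of the kind seen in the excerpt can arise.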

Nick Montfort is a poet and artist who uses computation as his medium. He is Professor of Digital Media at MIT and Principal Investigator in the Center for Digital Narrative at the University of Bergen, Norway. This article is adapted from the volume “Output: An Anthology of Computer-Generated Text, 1953–2023,” which Montfort co-edited with Lillian-Yvonne Bertram.

[1] N.a. 1963. “Exploring the fascinating world of language.” Business Machines 46, May 1963, 11–12. This output is from this article.

Ryan, James. 2017. “Grimes’ fairy tales: A 1960s story generator,” in Interactive Storytelling: Proceedings of the 10th International Conference on Interactive Digital Storytelling, ICIDS 2017, Funchal, Madeira, Portugal, November 14–17, 89–103.

[2] Masterman, Margaret. 1964. “The use of computers to make semantic toy models of language.” Times Literary Supplement, pp. 690–91.

Yngve, Victor H. 1961. “Random generation of English sentences.” Proceedings of the International Conference on Machine Translation and Applied Language Analysis, pp. 66–80. This excerpt is from this article.

[3] Knowles, Alison, and James Tenney. 1968. “A sheet from ‘The house,’ a computer poem,” in Studio International 25s (“Cybernetic Serendipity: The Computer and the Arts,” ed. Jasia Reichardt), 56. This excerpt is from this article.

[4] Parrish, Allison. 2018. “Articulations.” Using Electricity series. Denver: Counterpath. This excerpt is from this book.

[5] Fox, Chris. 2019. “General election 2019: How computers wrote BBC election result stories.” BBC News. December 13. https://bbc.com/news/technology-50779761. This excerpt is from this page.

Reiter, Ehud. 2019. “Election results: Lessons from a real-world NLG system,” on Ehud Reiter’s Blog. December 23. https://ehudreiter.com/2019/12/23/election-results-lessons-from-a-real-world-nlg-system/.

[6] Läufer, Milton. 2018. “A Noise Such as a Man Might Make.” Using Electricity series. Denver: Counterpath. This excerpt is from this book.