Notes From the Chaos Communication Camp

The Chaos Communication Camp happens every four years. The trouble is, every four years its attendance seems to double. This year the group that organizes the camp, the Chaos Computer Club, is struggling to accommodate 4,500 camping hackers.

The camp is being held in the German countryside at the site of a large 19th-century factory that once supplied Berlin with its terracotta roof tiles. I make it there by train and local bus on the second day of the event, and late that night pitch a tent in the middle of a spectacular lightning storm. The electrical grid that snakes through every part of the camp’s 20-odd acres withstands the deluge of water: There are no fires or electrocutions. Only the inside of my tent gets wet; there’s a vent at the top I can’t find the cover for.

I am not much further ahead in preparing my laptop’s security. I have failed to install Tor and am still woefully ignorant of many basic aspects of security. I have a dilemma to face the next day, too. If I am not up to practicing high spycraft, if I have not mastered even a basic privacy tool like Tor, is it fair for me to try to interview the “Berlin exiles” and other high-profile hackers I expect to meet at the camp?

Dawn finds me hunched stiffly over a hot coffee served by one of the food kiosks that is, surprisingly, open at this hour. I look up when a slight, bearded young man, coffee in hand, sits down with a nod at the same picnic table. Minutes go by, and it occurs to me to ask him where I might charge my laptop. I ask also if he knows anything about the special internet access the Chaos Computer Club encourages campers to use. I’m a lawyer, not a tech person, I tell him apologetically. I’m trying to be more secure, I explain, but it’s my professional ethic as a lawyer to act openly. When I try to act covertly, I get confused.

“Yes, well,” he responds, “one gets confused in all kinds of political processes, not just technological ones.”

He smiles warmly. He has a quiet, thoughtful demeanor.

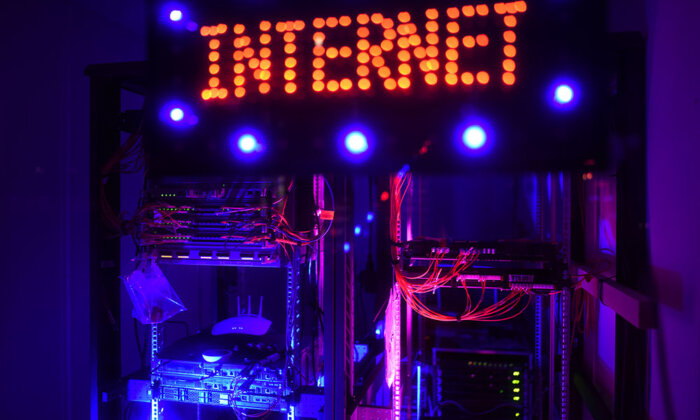

“But,” he says categorically, “in a place like this, you just don’t go on the open internet.”

He explains why: There are many hackers, and they love to hack into things. They can scan and see you anytime they like. Yes, they can get into your email. Yes, also your documents. Anything is possible. He smiles again. Yes, there are also security agencies that send people to this kind of conference, certainly.

He sees me blanching. Before coming here, I explain, I installed PGP, a non-tracking browser, and an activist-run email service on my laptop, more for credibility’s sake than from paranoia. But when I returned to the camp late yesterday, I needed to check in with my kids. I’d had to leave them on their own back in Canada, and they are only in their early teens. I joined the net using the camp’s open WiFi connection. In my specific circumstances, how bad could that be? My brain churns ineffectually trying to sort through the implications.

If you have a free software system like Linux, he says (I notice he does not say GNU/Linux, as Richard Stallman would wish he had), you can see the source code and watch over it all the time. You can see if it has been infected. With free software for encryption, it is the same thing: you can check to see if it is doing what it is intended to do. You have to watch over this all the time. People are at risk because of social media and commercial websites. There are many ways of getting your computer infected with tracking technology.

We talk some more, and I learn that he is an artist. He gave a talk on “glitch art” at the camp yesterday. He explains that this involves working with technical representations and finding errors in code to display aesthetically. For example, in the presentation he gave yesterday, he produced errors in images to make visible the glitches in a sequence of code from a U.S. drone system. He started this project three years ago, when he came across a leak by a group called DefenseSystems.com. The leak was that a drone operator did not know a video system for U.S. drones had errors in it, which led him to kill civilians when directing a drone attack in Pakistan.

He also makes “PGPoems”: He plays with code and network language to create partly encrypted poems. PGP, as I know, stands for Pretty Good Privacy, software engineer Phil Zimmermann’s public-key encryption tool. The reader of a PGPoem can see some words but has to do a public encryption key exchange with the artist in order to put together the whole thing.

It’s still early. Most people in the camp have yet to emerge from their tents. I can hear mourning doves calling softly to each other in a stand of silver birch trees. We huddle over the picnic table, our cups of coffee steaming our fingers warm.

He pulls a small yellow book out of his backpack and hands it to me. Titled “Operational Glitches: How to Make Humans Machine Readable,” it is an artistic treatment of the computer as a control system over time, he says — say, by biometrics or metadata index. It is an essay on code.

I turn the booklet over in my hands, a small artefact of the interesting times we live in. The text is in German, with headings like “Die falschheit des glaubens an die richtigkeit technischer bilder” (the falsity of faith in the accuracy of technical images), “Frabe by frame by frame” (error by frame by frame), “predictive killing,” “Produktionsmittel” (means of production), and “das Codicht” (code). It is full of recognizable names like Heidegger and Marx. I look up into the artist’s clear gray eyes and he reminds me also of Schiller and Goethe.

Who is this soulful German? His name, the booklet cover reveals, is Christian Heck.

Christian feels at risk as an artist not because he is disclosing secrets — that, he says, he does not do — but because he is making statements that authorities do not agree with. He knows they will monitor him. He also has a responsibility to friends, colleagues, and family. He never goes online unencrypted. Also, because pictures are full of metadata, revealing information such as time and place, he erases metadata so as not to leave traces: “Not just for me, but for all groups.” There are many security methods for being anonymous online. He never uses Gmail, for example, because Google analyzes your email with that service: “Many people say, ‘I don’t want to be used as a product by a private company.’”

Christian describes his involvement in spreading privacy know-how. He participates in a peer-to-peer teaching collective called “CryptoParty,” which is part of a grassroots internet privacy movement. When they organize a party, everyone can come, have a beer, and show one another how to encrypt a hard drive or email, use a Linux operating system, or work anonymously. (Later that day, I look up CryptoParty and find that the tech writer Cory Doctorow calls it “a Tupperware party for learning crypto.” The “CryptoParty Handbook” — over four hundred pages, “to get you started” — was crowdsourced by activists from all over the world in less than 24 hours.) Tactical Technology Collective is another interesting group, Christian says. It has a tent at the camp and does public education through animation. The group’s kit is called “Security in a Box.”

It may not be possible to make a whole system secure, “but you have to do your best,” he says, looking at me kindly. “Just like a mother.”

He explains all the various pieces you have to worry about in a security plan:

- Your hardware or MAC address. This is your unique numeric computer identifier. If it is visible, as it is on an “open” network like the open WiFi connection at the Chaos Communication Camp, it identifies you like a fingerprint.

- Your web browser. Popular web browsers include Firefox, Internet Explorer, and Safari. You need an alternative browser like Jondo or Whonix, and you must also think about the default settings you configure for them.

- Your default search engine. Most people have Google as their default search engine. You need a search engine that does not track you and does not keep a history of your browsing, like the alternative search engine DuckDuckGo.

- Your server. You need a network of proxy servers like Tor to hide your internet protocol address (from your router) and geographic location.

- Your internet service provider. You need one like Freifunk (“free radio”) that does not keep log files and therefore cannot track you going into Tor.

- Your operating system. You need one with free software like Linux (again, he says “Linux,” not “GNU/Linux”) so that you can see the source code and monitor whether it has been infected or not.

And there are two types of information to worry about:

- Your content. You should encrypt all your messages and the documents you send (OTR for chats and PGP for email and attachments). You should encrypt your hard drive with LUKS or TrueCrypt.

- Your metadata. You need a network of proxy servers like Tor to hide where you go and who you talk to. Tor does not encrypt the content of the message. Some people don’t understand this and inadvertently leave their content exposed.

The Tor network, Christian explains, hides your original location. With Tor, Google has only the metadata for your web search from the last proxy server (in Romania, say). Your location and your IP address are hidden. You do not need to trust Tor because it does not know what route your message took or its original location. “Hide My Ass,” another proxy server, does.

As Christian speaks, I am trying to square what he is telling me with what I already think I know about Tor. As I understand it, Tor is continually being improved. The way it currently works is that it uses two sets of keys. Tor lays out a random path of multiple nodes every ten minutes, and one “temporary” key is sent to each node. The temporary key is for the substantive message that will be sent. If there are three nodes, a temporary key is sent to each of the three nodes. It is encrypted using each node’s public key; that is, Tor uses each node’s public key to scramble the temporary key’s information. Only the node can decrypt the temporary key it has just been sent, using its private key. The node stores the temporary key for a short time, ready to use it on the next message it will receive. The next message will be the sender’s substantive message with its content, encrypted or not, wrapped in three layers of encrypted addresses. The first node will open the first layer of the addresses with the temporary key it has in its possession. It will scrub the sender’s metadata and then send the message on to its next destination. The second node will do the same thing with the next address, and so on.
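
The layering described above can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions: the node names, the `wrap` and `peel` helpers, and the XOR "cipher" are all invented for the example and are not secure, and real Tor sets up its per-node keys through a handshake and uses proper ciphers and fixed-size cells. What the sketch does show is the idea that each node can open only its own layer and learns only where to send the rest.

```python
import hashlib
import json
import os


def keystream(key: bytes, length: int) -> bytes:
    """Stretch a key into `length` pseudo-random bytes with SHA-256 (toy only)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR with the keystream. Applying it twice undoes it."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, destination: str, route: list) -> bytes:
    """Sender side: build the onion from the innermost layer outward.

    `route` is a list of (node_name, temporary_key) pairs, entry node first.
    """
    hops = [name for name, _ in route] + [destination]
    payload = message
    for i in range(len(route) - 1, -1, -1):
        _, key = route[i]
        layer = json.dumps({"next": hops[i + 1], "payload": payload.hex()})
        payload = xor_cipher(key, layer.encode())
    return payload


def peel(key: bytes, onion: bytes):
    """Node side: remove one layer, learning only the next hop."""
    layer = json.loads(xor_cipher(key, onion).decode())
    return layer["next"], bytes.fromhex(layer["payload"])


# Hypothetical three-node path with one temporary key per node.
route = [(name, os.urandom(32)) for name in ("entry", "middle", "exit")]
onion = wrap(b"hello", "example.org", route)

for name, key in route:
    next_hop, onion = peel(key, onion)
    print(name, "forwards to", next_hop)

# After the last layer is removed, the exit node holds the message as the
# sender wrote it. If the sender did not encrypt the content separately,
# it is readable at this point.
print(onion)
```

In this sketch the entry node knows who sent the onion but sees only "middle" as the next stop, while the exit node sees the destination but not the sender. It also makes Christian's earlier caveat concrete: the layers protect the route, not the content, which comes out at the far end exactly as it went in.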

But you really must have a clean computer before you go into Tor, Christian continues. If your computer is infected with malware, he says, someone spying on you could potentially see your whole route through Tor and all your correspondents. Instead of hiding yourself on the internet, you would be exposing your most sensitive networks. It would be like wearing an invisibility cloak you thought was working while it was not. It could expose your whole social network and put all of your correspondents at risk. A clean computer means a computer that has not been exposed to a possible hack through the internet before you start using Tor to hide yourself. Commercial sites are risky, and so are streaming videos and looking at PDFs online. This means you have to preconfigure your browser offline when you first get your computer and avoid using it for streaming, web surfing, and PDF downloads.

This is beginning to sound onerous to me.

But even with a clean computer, Christian says, if you are using the same browser (say, Safari) all the time and always configured the same way, security agencies can create a profile on you when you are using Tor based on the constant pattern of your browser and its particular configurations. (I struggle to translate this for my own understanding: even with Tor hiding your location and IP address, authorities can still profile you at the ends of the rabbit tunnel if you are using the same browser and configuration all the time? As if they can see you have a brown salt-and-pepper tail going into the rabbit warren and a brown salt-and-pepper tail coming out?)
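
A rough way to picture that tail is as a hash of the browser's settings. The sketch below is an assumption-laden illustration, not how any agency or tracker actually works: the attribute names, the sample values, and the `fingerprint` helper are all made up. It simply shows that if the configuration never changes, the same identifier turns up no matter which exit address the traffic appears to come from.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Reduce a browser configuration to a short, stable identifier."""
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode()).hexdigest()[:16]


# Hypothetical browser settings that stay the same from visit to visit.
browser = {
    "user_agent": "ExampleBrowser/1.0",
    "screen": "1440x900",
    "timezone": "UTC-8",
    "language": "en-CA",
    "fonts": ["Helvetica", "Menlo"],
}

# Two visits that appear to come from different places (documentation IPs).
monday = {"exit_ip": "198.51.100.7", "browser": browser}
friday = {"exit_ip": "203.0.113.42", "browser": browser}

same_tail = fingerprint(monday["browser"]) == fingerprint(friday["browser"])
print(monday["exit_ip"] != friday["exit_ip"], same_tail)  # True True
```

The addresses differ but the fingerprint does not, which is why Christian stresses thinking about browser defaults rather than relying on Tor alone.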

Cryptoparties over beer and pizza, Christian says, start with what people are interested in. They are meant to teach normal people with normal computers, people who may have no clue about security when they start. Then they learn to be more adept through many little talks, he explains.

With his art, Christian says, he is searching for other ways to help people understand the dangers of “right now,” other than through logical argument. Every week, there is a news story about surveillance, and this does not change people’s behaviors online.

Christian himself learned to code because he wanted to make a movie using film cutting in real time. Adobe did not have that function, so he had to create it.

“But ordinary people have many things to do other than become technical experts,” I say.

“Yeah, I know,” he replies. “They have families and jobs from nine to five, et cetera, but it’s just another way of behavior. You changed from using a Microsoft system to a Mac and learned how to work it, so you can change from Microsoft to Linux, for example.” He smiles again, warmly.

Christian stands up, his coffee finished. We shake hands like friends, and I thank him for his lesson on the basic elements of user security. Combined with the primer Andrew Clement gave me before my trip, I feel much more knowledgeable about how things work.

As Christian walks away, a recent news story I read in The Telegraph pops into my mind. I hesitate for a moment, then look it up, still using the open WiFi connection:

Kremlin Returns to Typewriters to Avoid Computer Leaks

The Kremlin is returning to typewriters in an attempt to avoid damaging leaks from computer hardware, it has been claimed …

Documents leaked by Mr. Snowden appeared to show that Britain spied on foreign delegates, including Dmitry Medvedev, then the president, at the 2009 London G20 meetings. Russia was outraged by the revelations but said it had the means to protect itself.

I will, it turns out, have the opportunity to talk with at least two of Berlin’s digital exiles over the next few days at the Chaos Communication Camp. They are here, circulating, and it should be easy to approach them and perhaps set up interviews with other exiles, including Julian Assange in London, where I have arranged to go after Germany. But do I want to take on that burden?

For all of the reasons I have been mulling over, I decide not to. I cannot offer them security, and I do not want to become a target of surveillance myself. I have a regular job to hold down, a mortgage, and children. In any case, their stories are background to my main objective for my book “Coding Democracy” — reporting on the collective movement I see emerging around the hacker scene and the direction that hacking is headed in this difficult political era. The technical problems I’ve been having with my tent will soon recede in importance: I will have so many people to talk to I will barely have time to sleep.

Maureen Webb is a labor lawyer and human rights activist. She is the author of “Illusions of Security: Global Surveillance and Democracy in the Post-9/11 World” and “Coding Democracy: How Hackers are Disrupting Power, Surveillance, and Authoritarianism,” from which this article is adapted. She has taught national security law as an Adjunct Professor at the University of British Columbia.