“Hallucinating” AIs Sound Creative, but Let’s Not Celebrate Being Wrong

When Sam Altman, OpenAI’s CEO, recently claimed that hallucinations were actually a good thing, because in fact GPT’s strength lies in its creativity, some observers might have raised an eyebrow: We humans are rather good at creativity without getting our facts all wrong. How could such an appeal to creativity make a decent counter to the many concerns about accuracy?

To begin, what do people mean when they say an AI system “hallucinates”? Take this example of what happens when GPT4 tries its hand at academic citations:

Me:

Can you complete the title of the paper by Keith L Downing written in 2004, “Development and the …”

GPT4:

Yes, the complete title of Keith L. Downing’s paper written in 2004 is “Development and the Baldwin Effect: Evolution by Any Other Name?” This paper delves into the Baldwin Effect and its implications for understanding evolution and development.

The part of the title “Evolution by Any Other Name?” is made up. As it happens, that is the title of another academic paper on “alternative models of biological evolution,” and so is very relevant, but very definitely wrong.

When people say GPT is hallucinating, they are referring to this kind of mangling of facts. But the idea of hallucination implies that at other times the facts have been accurately portrayed. Unfortunately, this promotes a misunderstanding of how large language models (LLMs) work, and misunderstanding how a technology works can make the difference between it being safe and dangerous. It might be better to say that everything GPT does is a hallucination, since a state of non-hallucination, of checking the validity of something against some external perception, is absent from these models. There is no right or wrong answer in their world, no meaning relating to goals.

That’s because LLMs are not models of brains, but of language itself, its patterns, structures, and probabilities. At heart their job description is incredibly simple: Given some text, they tell us what text comes next. It’s worth keeping front and center, however, that there is not always one right response. If I say “the tail that wags the …”, you might say the next word is “dog” with a high degree of certainty, but this is not the right and only answer. In any such context, there is much freedom, and the “rightness” of any answer depends not only on the conceptual context but on what you’re trying to do — your goal.
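To make the "what text comes next" idea concrete, here is a minimal sketch of choosing a continuation from a probability table. It is not how GPT is implemented: the table `NEXT_WORD_PROBS` and its numbers are invented for illustration, and the helpers `most_likely` and `sample` are hypothetical names. The point is only that "dog" is the most probable answer without being the only one.

```python
import random

# Hypothetical probabilities for the word after "the tail that wags the ...".
# These numbers are invented for illustration, not taken from any real model.
NEXT_WORD_PROBS = {
    "dog": 0.90,
    "cat": 0.04,
    "market": 0.03,
    "nation": 0.03,
}

def most_likely(probs):
    """Return the single highest-probability continuation."""
    return max(probs, key=probs.get)

def sample(probs, rng):
    """Draw one continuation in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample(NEXT_WORD_PROBS, rng) for _ in range(1000)]

print(most_likely(NEXT_WORD_PROBS))  # the "safe" completion
print(sorted(set(draws)))            # every continuation that was actually drawn
```

Nothing in this sketch checks whether a drawn word is true or useful; whether "market" is a better answer than "dog" depends entirely on a goal that sits outside the table.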

As we barrel into the AI age, the issue of the accuracy of LLMs has triggered mild concern in some quarters, alarm in others, and amusement elsewhere. In one sense Altman was rightly deflecting interest away from any claim that GPT can convey accurate information at all. In another sense, he was layering one misconception with another in the implication that hallucination is key to creative capability. But since he mentioned it, what of GPT’s creative prowess?

It is certainly clear that a large part of the uptake of GPT has been for creative tasks, so what makes something creatively productive, and does this need to be at the expense of facts? Huge amounts of effort have been put into understanding how humans do creative things, and, as an important corollary, dispelling myths about creativity. This vast literature is reasonably unanimous about one crucial property of human creative cognition: that it involves the performance of a sort of search. As the creativity researcher Teresa Amabile most eloquently justifies, creative problems are by their definition those for which there is not a known solution, and by extension, necessitate “heuristic methods” for seeking those solutions where no “algorithmic” method will suffice. Aligning with the psychologist Dean Simonton, I believe this is well condensed into the idea of “blind search”, noting that “blind” does not mean “random.” Think of a radar scanning space; it moves systematically in a circle, traversing all possible points, but is nevertheless blind in its searching. In cognitive terms, blind search also necessitates evaluation, knowing what we’re looking for.

Several theoretical approaches to the psychology of creativity share the idea that human brains exhibit a capacity to perform a structured, distributed search, farming out a sort of idea generation, perhaps to subconscious modules, which are then evaluated more centrally.

But this is not only a process that happens inside brains: We do it collectively too. For example, the method of brainstorming was systematically developed to support divergent thinking, making overt recognized strategies for creative collaboration, and again identifying that heuristic methods of search are powerful for creative success. In a brainstorming session, each participant acts like one of those little, simple generative modules; two of the key brainstorming rules are to delay judgment, and to go for quantity over quality. Evaluation happens later in brainstorming, the point being that sometimes it closes our minds to fruitful possibilities.

That’s not to say there is no more to effective creativity than that: Both individual and social forms of creativity have many other important dimensions — mastery of a subject, the ability to learn from others, the ability to conceptually represent problems, and all the peripheral work that brings creative ideas to fruition.

But thinking about the sorts of structures that may support heuristic, distributed search helps us focus on effective architectures for creativity, above all recognizing that in simplest terms even the creativity of a single human being arises from the interaction between types of cognitive processes that generate and that evaluate.
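The interaction between generating and evaluating can be sketched as a toy generate-and-test loop. This is an illustration under stated assumptions, not a model of cognition: `generate`, `evaluate`, and `adaptive_search` are hypothetical names, the word list is made up, and counting goal words is a crude stand-in for real judgment. The generator is blind but systematic, in that it enumerates every pairing without knowing the goal.

```python
from itertools import permutations

def generate(parts):
    """Blind but systematic generation: enumerate every ordered pair of parts."""
    for a, b in permutations(parts, 2):
        yield f"{a} {b}"

def evaluate(idea, goal_words):
    """Score an idea by how many goal words it contains (a stand-in for judgment)."""
    return sum(word in idea.split() for word in goal_words)

def adaptive_search(parts, goal_words):
    """Generation plus evaluation: keep the best-scoring candidate."""
    return max(generate(parts), key=lambda idea: evaluate(idea, goal_words))

parts = ["solar", "kettle", "bicycle", "umbrella"]
candidates = list(generate(parts))  # generation alone: no goal involved
best = adaptive_search(parts, goal_words={"solar", "bicycle"})

print(len(candidates))
print(best)
```

Calling `generate` on its own yields plenty of material but no preference among the results; only once `evaluate` is supplied with a goal does the search become selective.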

I’ve argued that we might even tease out two flavors of the creative process altogether, specifically to help us understand how machines can play creative roles. “Adaptive” creativity is the behavior we typically associate with human intelligence. It is the full, integrated package of generation and evaluation. Meanwhile, a less discernible “generative” creative process is all around us. It is sometimes haphazard, sometimes more structured, but generally goalless. Within human brains, it might take the form of subconscious streams whirring away. But it even exists where there is no overt evaluation to speak of, just generating stuff, as in the basic goalless mechanism of evolution by natural selection (being mindful that “biological fitness” is not a goal but an outcome of evolutionary processes).

While classic “hero” stories of creativity concern the neatly bounded adaptive variety — this is the central myth of creativity — the wild generative form is actually more prevalent: accidental scientific discoveries, musical styles that arise from the quirks of a successful performer, solutions in search of a problem.

This is a very loose sketch, but it helps us immensely if we come back to thinking of GPT as a creative tool. Is it a good generator, a good evaluator, and can it put everything together into an adaptively creative package? GPT can superficially do a great job at both generation and evaluation: it can spawn some new ideas in response to a request, or critique something we input. We actually know a lot about the former: Many generations of older and less sophisticated generative systems have been used that way for some time. They were incapable of evaluating their own output, but generatively very powerful as a stimulant for creative people, as ideas machines. Sometimes the ideas are extraordinarily good, other times they’re mediocre, but that’s still a productive situation if this idea generation is situated within an effective creative assemblage: one involving a discerning human on evaluation duty.

It’s also worth noting that as a “mere generator,” GPT is better than most because it can do a good job of integrating context and is a whiz at handling concepts: Remember that productive creative search is blind but systematic, not random. Integrating context, and reinterpreting that context, is a critical way in which we can more systematically structure a creative search. Indeed, GPT works best at systematically integrating different constraints. Witness GPT rendering the proof of infinite primes in Shakespearian verse (this is my reproduction of an example given in the exhaustive study paper “Sparks of Artificial General Intelligence”).

Better still, GPT can evaluate things too, perhaps not in the simple terms of “this is good, that’s bad,” but via structured feedback that helps the user think through ideas. But, it is always the user who has to do the ultimate job of evaluation: You can tell GPT your goals, but it does not share those goals. It is actually just providing more generative material for reflection that the user needs to evaluate. In creative terms, GPT remains a generatively creative tool, a powerful one no doubt.

Yet, although GPT does not perform the full package of distributed creative search that humans are particularly good at, there are clear pointers to how close it might be to doing so. Watch GPT in its current “interpreter” version as it writes code, runs that code on a server, and adapts its next step based on the results, and you can see how thin that gap might be wearing.

GPT does these things to a degree that far exceeds anything that came before, but it is still a loose cannon for creative generation. Often the results are poor. As colleagues and I hope to show in a forthcoming paper, building on existing work to understand the dialogic qualities of co-creative interaction, it shows little talent for grasping aesthetic goals, let alone interacting through dialogue with the user to best foster creative results. We remain open to whether, or exactly how, advances in these areas require radically different architectures, since GPT has surprised many thus far with what a “stochastic parrot” language model can achieve, exhibiting an emergent capacity for logic and understanding. But in the search for the full “adaptively creative” package, the issue of aligning with the user’s creative goals will turn out, I believe, to be absurdly complex and possibly a little bit sinister.

A recent example makes a striking case in point. A major supermarket created an app that would suggest original recipes to customers, based on the ingredients they had in their shopping basket. We might agree this is a charming idea for offering creative inspiration to break the mundanity of a daily grocery shop. The problem was that the generated recipes included dangerous and potentially deadly concoctions. As The Guardian reported: “One recipe it dubbed ‘aromatic water mix’ would create chlorine gas. The bot recommends the recipe as ‘the perfect nonalcoholic beverage to quench your thirst and refresh your senses’…. ‘Serve chilled and enjoy the refreshing fragrance,’ it says, but does not note that inhaling chlorine gas can cause lung damage or death.” “Tripping” might be a better term than “hallucinating” for such errors of judgment. In fact, these are less factual errors than symptoms of a wider problem of groundedness in real-world concerns, death being a rather important one to us carbon-based lifeforms.

So what about hallucination? With creativity, as with any other use of GPT, such as signing off on a generated summary of a topic, the user is the ultimate arbiter. They must clearly understand that the language model is wired to make plausible predictions, not to report accurate information or to share the user’s goals. But it is patently evident that human creativity and human attention to accuracy and truth are not mutually exclusive. Our brains can freewheel generative ideation without mistaking our imagination for fact. Thus while there is truth in the idea that creativity might benefit from temporary suspensions of disbelief, ultimately creative capability should not be a distraction from the expectations of accuracy. I don’t make any claim that later instances of GPT won’t “solve” hallucination in some way. Indeed, a version with integrated web search, being released imminently, may rapidly and effectively reduce instances of people being unknowingly served incorrect information. But for now it is critical that users understand the basis for GPT’s factual inaccuracy: It lives in a world of word probabilities, conceptually sophisticated though it is, not of human concerns.

Perhaps the most important point of all, though, is that GPT is not an abstract academic experiment. It is big business, already out in the wild and driving many actors’ commercial ambitions. There are two points where this reality manifests a slightly different take on GPT’s capability.

The first is that, for reasons largely of safety, we see GPT being increasingly shrouded in input and output filters and pre- and post-prompts that tidy up the user experience. It is already a complex assemblage. Although the LLM part is often described as a black box, it is the stuff around it that is literally (socially) black-boxed, that we don’t get to see or understand. Our potential co-creativity with such machines is mediated in multiple hidden ways.

The second is that GPT is trained on millions of copyrighted texts; whether its use infringes on this copyright depends on the hotly debated issue of fair use under current copyright law in the U.S. and elsewhere. The fair-use argument works because GPT does not and cannot plagiarize significant chunks of creative material, and copyright laws cover specific instances of creative works, not general styles, which is what GPT is expert at reproducing. Generally, it cannot reproduce specific instances because it has no concrete record of the original sources.

As the makers of AI systems push the idea they are making creatively fertile tools, these multiple concerns and more are engaged in a complex dance: creative productivity; avoiding plagiarism; factual accuracy; safety; usability; explainability; energy efficiency; and profit.

Limitations aside, GPT can indeed be an incredibly powerful creative tool, best understood as a generatively creative system. But hallucination is a troubled term. It is critical that even creative uses develop with a clear-headed understanding of LLMs’ grasp on reality.

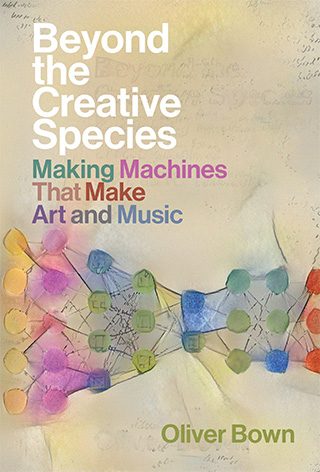

Oliver Bown is associate professor and co-director of the Interactive Media Lab at the School of Art and Design at the University of New South Wales. He is the author of the book “Beyond the Creative Species.”