AI Is No Match for the Quirks of Human Intelligence

At least since the 1950s, the idea that it would soon be possible to create a machine capable of matching the full scope and level of achievement of human intelligence has been greeted with equal amounts of hype and hysteria. We’ve now succeeded in creating machines that can solve specific, fairly narrow problems (“smart” machines that can diagnose disease, drive cars, understand speech, and beat us at chess), but general intelligence remains elusive.

Let’s get this out of the way: Improvements in machine intelligence will not lead to runaway machine-led revolutions. They may change the kind of jobs that people do, but they will not spell the end of human existence. There will be no robo-apocalypse.

The emphasis of intelligence testing and computational approaches to intelligence has been on well-structured and formal problems. That is, problems that have a clear goal and a set number of possible solutions. But we humans are creative, irrational, and inconsistent. Focusing on these well-structured problems may be like looking for your lost keys where the light is brightest. There are other problems that are much more typical of human intelligence and deserve a closer look.

One such group is made up of so-called insight problems. Insight problems generally cannot be solved by a step-by-step procedure, like an algorithm, or if they can, the process is extremely tedious. Instead, insight problems are characterized by a kind of restructuring of the solver’s approach to the problem. In path problems, which are well-structured in the sense just described, the solver is given a representation that includes a starting state, a goal state, and a set of tools or operators that can be applied to move through the representation. In insight problems, the solver is given none of these.

It was an insight problem that supposedly led Archimedes to run naked through the streets of Syracuse when he solved it. As the story goes, Hiero II (270 to 215 BC), the king of Syracuse, suspected that a votive crown that he had commissioned to be placed on the head of a temple statue did not contain all of the gold it was supposed to. Archimedes was tasked with determining whether Hiero had been cheated. He knew that silver was less dense than gold, so if he could measure the volume of the crown along with its weight, he could determine whether it was pure gold or a mixture. The crown’s shape, however, was irregular, and Archimedes found it difficult to measure its volume accurately using conventional methods.

According to Vitruvius, who wrote about the episode many years later, Archimedes realized during a visit to the public baths that the more his body sank into the water, the more water was displaced. He saw that the volume of water displaced could serve as a measure of the volume of the crown. Once he achieved that insight, finding out that the crown had, in fact, been adulterated was easy.


The actual method that Archimedes used was probably more complicated than this, but the story illustrates the general outline of insight problems. The irregular shape of the crown made measuring its volume impossibly difficult by conventional methods. Once Archimedes recognized that the crown’s volume, and therefore its density, could be measured another way, the actual solution was easy.

With path problems, the solver can usually assess how close the current state of the system is to the goal state. Most machine learning algorithms depend on this assessment. With insight problems, it is often difficult to determine whether any progress at all has been made until the problem is essentially solved. They’re often associated with the “Eureka effect,” or “Aha! moment,” a sudden realization of a previously incomprehensible solution.
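The contrast can be made concrete with a toy path problem. This is an illustrative sketch, not something from the original text. The starting state is one number and the goal state is another. The operators ("add 1" and "double") and the function names are hypothetical, chosen only for the example. Because the solver can compute how far the current state is from the goal at every step, a simple greedy best-first search can use that distance to decide what to try next.

```python
import heapq

# Hypothetical operators for a toy path problem: each maps a state to a new state.
OPERATORS = {"add 1": lambda n: n + 1, "double": lambda n: n * 2}

def solve_path_problem(start, goal, operators, limit=1000):
    """Greedy best-first search: always expand the state that looks closest to the goal."""
    frontier = [(abs(goal - start), start, [])]  # (distance to goal, state, operators used)
    seen = {start}
    while frontier:
        _, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        for name, op in operators.items():
            nxt = op(state)
            if 0 <= nxt <= limit and nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (abs(goal - nxt), nxt, path + [name]))
    return None  # goal unreachable within the limit

def apply_path(start, path, operators):
    """Replay a sequence of operator names from the starting state."""
    state = start
    for name in path:
        state = operators[name](state)
    return state

print(solve_path_problem(1, 10, OPERATORS))
# ['add 1', 'double', 'double', 'add 1', 'add 1']  (1 -> 2 -> 4 -> 8 -> 9 -> 10)
```

Greedy search does not guarantee the shortest route. Here, add 1, double, add 1, double is one step shorter. It does show how a measurable distance to the goal drives progress, and that measure is exactly what an insight problem withholds.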

Another example of an insight problem is the socks problem. You are told that there are individual brown socks and black socks in a drawer in the ratio of five black socks for every four brown socks. How many socks do you have to pull out of the drawer to be certain to have at least one pair of either color? Drawing two socks is obviously not enough because they could be of different colors.

Many (educated) people approach this problem as a sampling question. They try to reason from the ratio of black to brown socks how big a sample they would need to be sure to get a complete pair. In reality, however, the ratio of sock colors is a distraction. No matter what the ratio, the correct answer is that you need to draw three socks to be sure to have a matched pair. Here’s why:

With two colors, a draw of three socks is guaranteed to give you one of the following outcomes:

- Black, black, black—pair of black socks

- Black, black, brown—pair of black socks

- Black, brown, brown—pair of brown socks

- Brown, brown, brown—pair of brown socks

The ratio of black to brown socks can affect the relative likelihood of each of these four outcomes, but only these four are possible if three socks are selected. The selection does not even have to be random. Once we have the insight that there are only four possible outcomes, the problem’s solution is easy.
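The argument can be checked by brute force. The sketch below assumes only that each sock is one of two colors, and its function names are hypothetical. It enumerates every possible sequence of draws. The 5:4 ratio never appears in it, because the guarantee depends only on the number of colors (the pigeonhole principle).

```python
from itertools import count, product

COLORS = ("black", "brown")

def has_pair(draw):
    """A draw contains a matched pair if some color appears at least twice."""
    return len(set(draw)) < len(draw)

def guaranteed_pair(n, colors=COLORS):
    """True if every possible sequence of n draws contains a matched pair."""
    return all(has_pair(draw) for draw in product(colors, repeat=n))

def smallest_guaranteed_draw(colors=COLORS):
    """Smallest number of socks that always yields a pair, whatever the ratio."""
    return next(n for n in count(1) if guaranteed_pair(n, colors))

def distinct_outcomes(n, colors=COLORS):
    """Unordered outcomes of n draws (the order of drawing is ignored)."""
    return sorted({tuple(sorted(draw)) for draw in product(colors, repeat=n)})

print(smallest_guaranteed_draw())  # 3
print(len(distinct_outcomes(3)))   # 4
```

`distinct_outcomes(3)` lists exactly the four combinations above. With a third color in the drawer, `smallest_guaranteed_draw` returns 4, which shows that the answer tracks the number of colors, not their proportions.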

Insight problems are typically posed in such a way that there are multiple ways that they could be represented. Archimedes was stymied as long as he thought about measuring the volume of the crown with a ruler or similar device. People solving the socks problem were stymied as long as they thought of the problem as one requiring the estimate of a probability. How you think about a problem, that is, how you represent what the problem is, can be critical to solving it.

Interesting insight problems typically require the use of a relatively uncommon representation. The socks problem is interesting because, for most of us, the problem is most likely to evoke a representation centered on the ratio of 5:4, but this is a red herring. The main barrier to solving problems like this is to abandon the default representation and adopt a more productive one. Once the alternative representation is identified, the rest of the problem-solving process may be very rapid. Laboratory versions of insight problems generally do not require any specific deep technical knowledge. Most of them can be solved by gaining one or two insights that change the nature of how the solver thinks about the problem.

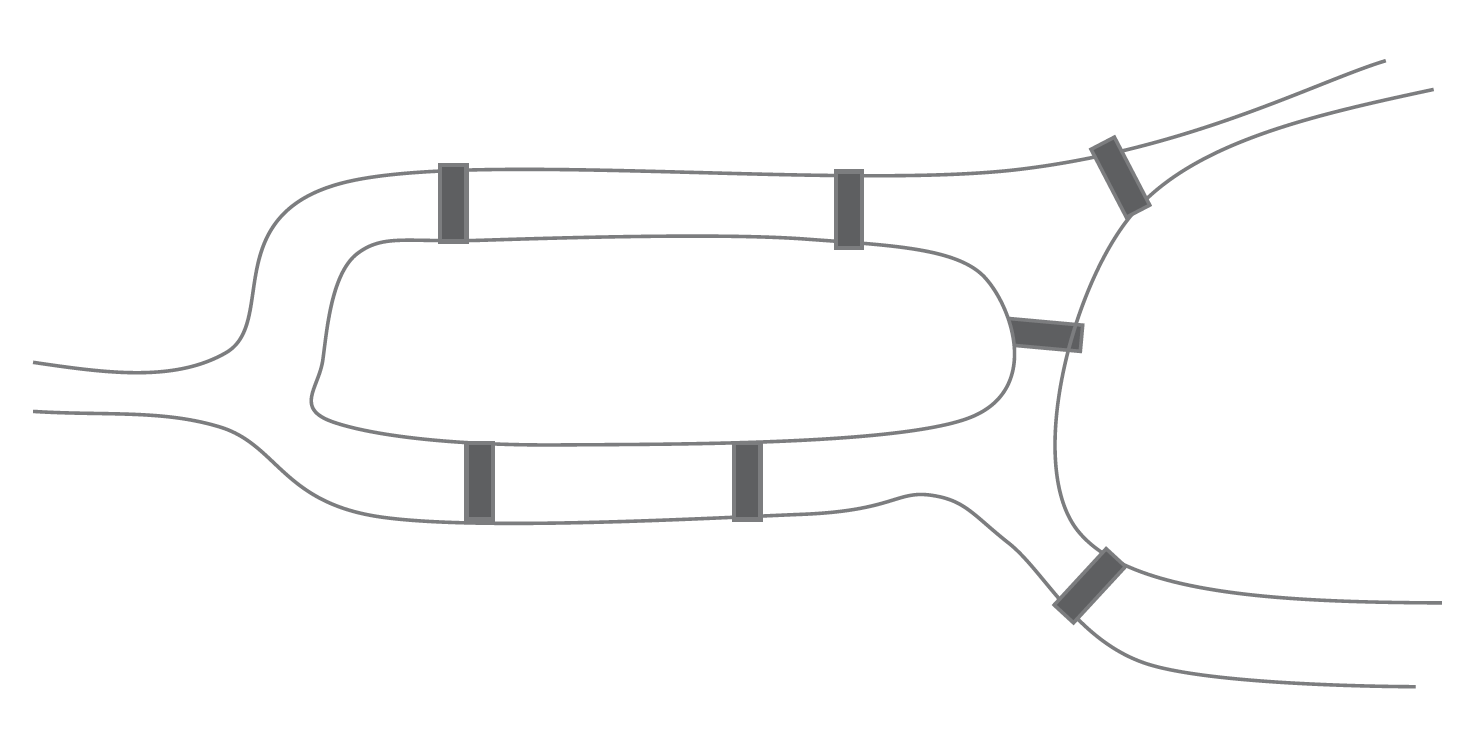

Here are a few more examples. The city of Königsberg (now Kaliningrad, Russia) was built on both sides of the Pregel River. Seven bridges connected two islands and the two sides of the river. Can you walk through the city, crossing each of the seven bridges exactly once? In the accompanying map, the bridges are marked in gray.

Königsberg is divided into four regions, and each bridge connects exactly two of them. Except at the start or the end of the walk, every time one enters a region by a bridge, one must leave it by another bridge. Because the number of entries must equal the number of exits, all the bridges touching a region can be crossed exactly once only if their number is even: half of them are used to enter the region and half to leave it. The only possible exceptions are the region where the walk starts and the region where it ends. So a city can be walked without repeating a bridge only if it has either no regions or exactly two regions (one where you start and one where you finish) with an odd number of bridges. In Königsberg, every region is served by an odd number of bridges, so there is no way to walk across the seven bridges exactly once each.
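The even/odd argument is easy to mechanize. In the sketch below, the region labels and function names are hypothetical. The bridge list encodes the commonly drawn layout of the seven bridges: two from the central island to each bank, one from the central island to the eastern island, and one from each bank to the eastern island. The code counts the bridges touching each region and applies the rule just described.

```python
from collections import Counter

# Hypothetical labels: "island" is the central island, "east" is the second island,
# and "north"/"south" are the two banks. Each bridge is the pair of regions it joins.
BRIDGES = [
    ("island", "north"), ("island", "north"),
    ("island", "south"), ("island", "south"),
    ("island", "east"),
    ("north", "east"),
    ("south", "east"),
]

def bridge_counts(bridges):
    """Number of bridges touching each region (every bridge touches two regions)."""
    counts = Counter()
    for a, b in bridges:
        counts[a] += 1
        counts[b] += 1
    return counts

def walk_possible(bridges):
    """With all regions connected, a walk crossing every bridge exactly once exists
    if and only if zero or exactly two regions touch an odd number of bridges."""
    odd = [region for region, n in bridge_counts(bridges).items() if n % 2 == 1]
    return len(odd) in (0, 2)

print(sorted(bridge_counts(BRIDGES).values()))  # [3, 3, 3, 5]
print(walk_possible(BRIDGES))                   # False
```

Adding a hypothetical eighth bridge between the two banks would leave exactly two odd regions (the central island and the eastern island). `walk_possible` then returns True, and such a walk would have to start at one of those two regions and end at the other.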

Or how about this one: Here is a sequence of four numbers: 8, 5, 4, 9. Predict the next numbers in this sequence.

If you’re having trouble, try writing out the names of digits in English:

- Eight five four nine

The next three numbers are 1, 7, and 6.

The full sequence is:

- Eight five four nine one seven six three two zero.

They are listed in alphabetical order of their English names. The usual representation of the series as digits ordered numerically must be replaced by a representation in which the English names are ordered alphabetically.
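Under the new representation, the whole sequence falls out of a single sort. The sketch below is a minimal illustration with hypothetical names. It orders the digits 0 through 9 by their English names instead of by their numeric values.

```python
DIGIT_NAMES = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]

def alphabetical_digits(names=DIGIT_NAMES):
    """Digits ordered by the alphabetical order of their names, not by value."""
    return sorted(range(len(names)), key=lambda d: names[d])

print(alphabetical_digits())  # [8, 5, 4, 9, 1, 7, 6, 3, 2, 0]
```

Passing the digit names from another language would produce a different sequence. The pattern lives in the representation, not in the numbers.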

And here’s one more — the two-strings problem, which was studied by the experimental psychologist Norman Raymond Frederick Maier in 1931. Imagine you are in a room with two strings hanging from the ceiling. Your task is to tie them together. In the room with you and the strings are a table, a wrench, a screwdriver, and a lighter. The strings are far enough apart that you cannot reach them both at the same time. How can these strings be tied together?

The string problem can be solved by using one of the tools as a weight at the end of one of the strings so that you can swing it and catch it while holding the other string. The insight is the recognition that the screwdriver can be used not just to turn screws but also as a pendulum weight.

Relatively little is known about how we solve insight problems. They are difficult to study in depth in the laboratory, because people find it hard to describe the steps they go through to solve them. We all know that people do not always behave in the systematic ways suggested by logical thought. These deviations are not glitches or bugs in human thought but essential features that enable human intelligence.

Quirks of Human Intelligence

We do not ordinarily seem to pay much attention to the formal aspects of a problem, especially when making risky choices. The psychologists Amos Tversky and Daniel Kahneman found that people made different choices when presented with the same alternatives, depending on how these alternatives were described.

Participants in one of their studies were asked to imagine that the country was threatened by a new disease that was expected to kill 600 people. They were told that two programs had been proposed to combat it and were asked to choose between the two treatments. In the first version they were told:

Treatment A will save 200 lives, whereas under Treatment B, there is a one-third probability of saving all 600 people and a two-thirds probability of saving no one.

Given this choice, 72 percent of the participants chose Treatment A. Being certain to save 200 people was seen as preferable to the risk that all 600 would be lost.

A second group was given a different version of the same choice:

Under Treatment A, 400 people will die. Under Treatment B, there is a one-third probability that no one will die and a two-thirds probability that all 600 people will die.

In this second version, 22 percent of the participants chose Treatment A. Assuming that people believe that the numbers in each alternative are accurate, Treatment A is identical for both groups. Presumably, 600 people will die if no treatment is selected. In the first version, 200 of these people will be saved, meaning that 400 of them will die. In the second version, 400 people will die, meaning that 200 of them will be saved.

A rational decision maker should make the same choice under both descriptions, yet the differences in people’s preferences were substantial. The first version emphasized the positive aspects of the alternatives, and the second emphasized the negative. By a dramatic margin, people preferred the certain option when it was described positively.


It is worth noting that alternative B was also identical under both conditions. The expected number of people to survive under alternative B was also 200, but this alternative also included uncertainty. People preferred the certain outcome over the uncertain one when the certain one was framed in a positive tone and preferred the uncertain alternative when the certain one was framed in a negative tone. The frame or tone of the alternatives controlled the willingness of the participants to accept risk.
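The arithmetic behind "identical" can be checked directly. This sketch takes the probabilities as one-third and two-thirds, the values used in Tversky and Kahneman's original study, and uses exact fractions. The names are hypothetical. Each treatment is stored once as a list of outcomes and then read under either frame.

```python
from fractions import Fraction

AT_RISK = 600  # people expected to die if nothing is done

# Each treatment is a list of (probability, lives saved) outcomes.
TREATMENT_A = [(Fraction(1), 200)]
TREATMENT_B = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]

def expected_saved(outcomes):
    """Expected lives saved: the 'positive' framing."""
    return sum(p * saved for p, saved in outcomes)

def expected_deaths(outcomes):
    """Expected deaths: the 'negative' framing of the very same outcomes."""
    return sum(p * (AT_RISK - saved) for p, saved in outcomes)

for name, treatment in [("A", TREATMENT_A), ("B", TREATMENT_B)]:
    assert sum(p for p, _ in treatment) == 1  # outcome probabilities are complete
    print(name, expected_saved(treatment), expected_deaths(treatment))
# A 200 400
# B 200 400
```

Both treatments save 200 people and lose 400 on average, whichever frame is used. The only difference is that Treatment A's outcome is certain and Treatment B's is a gamble.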

From a rational perspective, the effect of positive versus negative framing makes no sense. Formally, these alternatives are identical. One could say that this is an example of human foolishness rather than human intelligence. On the other hand, this error may tell us something important about how we make our decisions. Correct and incorrect decisions are both produced by the same brains/minds/cognitive processes.

Perhaps the apparent irrationality of the choices made in the treatment problem is due to limitations in the way that people can think about a problem in a short amount of time. People seem to have dramatic capabilities in some areas of cognition but decidedly limited ones in others.

For example, our capacity to recognize pictures from memory is almost limitless. In one demonstration of this phenomenon, people were shown 10,000 images for a few seconds each. They were then tested by being shown two pictures, one of which they had seen and one that they had not seen. They could choose correctly in about 83 percent of these pairs.

On the other hand, when psychologists Raymond Nickerson and Marilyn Adams asked people living in the United States to draw the front and back of a U.S. penny, they found that people were remarkably inept at remembering what was on a coin that they saw practically every day. Of the eight critical features that Nickerson and Adams identified, participants included only about three. Try it, and see what you can come up with. If you think it was because of the low value of the penny or because we don’t use coins much anymore, try recalling other common objects, such as a $1 or $20 bill or your credit card.

Unlike computers, we are relatively limited in what we can keep in active memory at one time. Digit spans were used in some early intelligence tests. In a test of digit spans, the examiner provides a set of random digits (for example, 5, 1, 3, 2, 4, 8, 9) to the person being tested, and the person is supposed to repeat them back immediately. Most healthy adults can repeat back about seven digits.

The limit of about seven items is not restricted to numbers. In 1956, George Miller published a paper called “The Magical Number Seven, Plus or Minus Two.” In it, he noted the wide range of memory tasks and categories in which people could handle only between five and nine items without making errors.

Miller was among the first cognitive psychologists to talk about memory chunks. We can adopt representations that allow us to expand the number of items that we can keep in mind. Other researchers studied a subject who, after extended practice, could remember up to 81 digits. This person, identified by his initials, SF, increased his memory span by organizing the digits into chunks related to familiar facts, such as race times (he was an avid runner) or dates.
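Chunking reduces the number of items that must be held in mind. The sketch below illustrates that bookkeeping with a hypothetical 81-digit string and a hypothetical `chunk` helper. Grouping the digits in threes leaves 27 items, and grouping those chunks in threes leaves 9, which falls within Miller's range. The fixed group size is an assumption made for the sketch. SF's chunks worked because each one meant something to him (a race time, a date), and mechanical grouping does not capture that.

```python
def chunk(items, size):
    """Split a sequence into consecutive groups of `size` items (the last may be shorter)."""
    return [items[i:i + size] for i in range(0, len(items), size)]

# A hypothetical 81-digit string; any digits would do for counting purposes.
digits = "0123456789" * 8 + "0"

level1 = chunk(digits, 3)  # three-digit chunks
level2 = chunk(level1, 3)  # groups of three chunks
print(len(digits), len(level1), len(level2))  # 81 27 9
```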

These and other psychological phenomena show that we have a complexity to our thinking and intellectual processes that is not always in our favor. We jump to conclusions. We are more easily persuaded by arguments that we prefer to be true or that are presented in one context or another. We do sometimes behave like computers, but more often, we are sloppy and inconsistent.


Daniel Kahneman describes the human mind as consisting of two systems. One is fast, relatively inaccurate, and automatic; the other is slow, deliberate, and, when it does finally reach a conclusion, more accurate. The first system, he says, is engaged when you see a picture and note that the person in it is angry and likely to yell. The second system is engaged when you try to solve a multiplication problem like 17 × 32. The recognition of anger, in essence, pops into our mind without any obvious effort, but the math problem requires deliberate effort and maybe a pencil and paper (or a calculator).

Kahneman may be wrong in describing these as two separate systems. They may be part of a continuum of processes, but he is, I think, undoubtedly correct about the existence of these two kinds of processes in human cognition (and maybe ones in between). What he calls the second system is very close to what I call artificial intelligence. It involves deliberate, systematic efforts that require the use of cognitive inventions.

The bat-and-ball problem shows one way that the two kinds of process interact. Try to answer this one as quickly as you can. Let’s say that to buy a bat and a ball costs $1.10. The bat costs $1.00 more than the ball. How much does the ball cost?

Most people’s first response is to say that it costs 10 cents. On reflection, however, that cannot be right because then the total cost of the bat and ball would be $1.20, not $1.10. One dollar is only 90 cents more than 10 cents. The correct answer is that the ball costs 5 cents. Then the bat costs $1.05, which together add up to $1.10. The initial, automatic response can be overridden by a more deliberate analysis of the situation.
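The deliberate check can be written out. The sketch works in whole cents, an assumption that keeps the arithmetic exact, and its names are hypothetical. The two facts give ball + (ball + 100) = 110, so twice the ball's price is 10 cents. The code tests the intuitive answer against both facts and then solves for the price directly.

```python
TOTAL = 110       # bat + ball, in cents
DIFFERENCE = 100  # bat - ball, in cents

def consistent(ball):
    """Does a proposed ball price satisfy both facts in the problem?"""
    bat = ball + DIFFERENCE
    return bat + ball == TOTAL

def solve_ball():
    # ball + (ball + DIFFERENCE) = TOTAL, so 2 * ball = TOTAL - DIFFERENCE
    twice_ball = TOTAL - DIFFERENCE
    assert twice_ball % 2 == 0, "no whole-cent solution"
    return twice_ball // 2

print(consistent(10))                          # False: bat 110 + ball 10 = 120
print(solve_ball(), consistent(solve_ball()))  # 5 True
```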

Computational intelligence has focused on the kind of work done by the deliberate system, but the automatic system may be just as important, or more so. It may also be more challenging to emulate in a computer. The automatic system’s rapid learning may sometimes result in inappropriate hasty generalizations (I always get cotton candy at the zoo), but it may also be an important tool that allows people to learn many things without the huge number of examples that most machine learning systems require. A hasty generalization proceeds from one or a few examples to encompass, sometimes erroneously, a whole class of items. Ethnic prejudices, for example, often derive from a few examples (each of which may be the result of yet another reasoning fallacy, called “confirmation bias”) and extend to large groups of people.

Hardly a day goes by without a call for some kind of regulation of artificial intelligence, either because it is too stupid (for example, face recognition) or because it is imminently too intelligent to be trusted. But good policy requires a realistic view of what computers can actually do and what they have the potential to become. If a problem can be solved by analytic capabilities alone, then a machine learning system is likely to exceed the capabilities of humans solving similar problems. Analytic problem solving is directly applicable to systems that gain their capabilities through optimization of a set of parameters. On the other hand, if the problem requires divergent thinking, commonsense knowledge, or creativity, then computers will continue to lag behind humans for some time.

As Alan Turing said in 1950, “We can only see a short distance ahead, but we can see plenty there that needs to be done.”

Herbert L. Roitblat is Principal Data Scientist at Mimecast. He is the author of “Algorithms Are Not Enough: Creating General Artificial Intelligence” from which this article is adapted.