The Tyranny of Science Over Mothers

No coffee. No seafood. No bicycles. No deli meat. No alcohol. Almost as soon as she sees the telling double lines, the pregnant woman in modern America is bombarded with new regulations for her body. The dynamic, ever-changing nature of pregnancy seems to induce a state of risk aversion that demonstrates a key argument of our book “Optimal Motherhood and Other Lies Facebook Told Us” quite well: In spaces where scientific evidence is incomplete or unclear, the default is to subject women to more — not fewer — restrictions.

This dynamic is the result of a complex braid of U.S. risk aversion, the legacy of the Victorian Cult of True Womanhood (more on that in a bit), and a neoliberal culture that reveres a reductive version of science that does not make room for complex, nuanced answers. Like the Cult of True Womanhood and its new transfiguration, what we call Optimal Motherhood, these restrictions have a history.

Beginning with the development of germ theory and bacteriology in the 1880s, Western society increasingly believed that if risks could be identified, they could by definition be avoided. The formula was deceptively simple for a society still rocked by post-Darwinian secularism and on the lookout for new modes of understanding and thriving in an often chaotic world. Although the Victorians of the 1880s did not yet have antibiotics or other effective cures for infectious disease, product advertisements in periodicals, often promoting a variety of antiseptic panaceas, spoke to the instantaneous development of a fantasy of a risk-free life. This fantasy (for it remains and will always be a fantasy) was only emboldened by ever-evolving Western medicine, which, as the century rolled over, began to develop cures only imagined in the 1880s.

As the theoretical availability of a risk-free life seemed increasingly possible (in this example, the hope of cures for disease seemed just around the corner), a funny thing happened: Society became generally more paranoid about risk because people felt it was avoidable. To put it another way, the more that perfect safety and health seemed within Western society’s grasp, the more people began to feel a pressure to maintain vigilance and avoid these risks. If disease was theoretically avoidable, it also seemed that everyone ought to do everything they could to avoid it. Thus, the moment disease was no longer seen as an inevitability, neoliberalism swooped in to make it seem like good, responsible people would obviously find ways to successfully avoid such risks.

Hypervigilance itself became nonnegotiable as well. Good, responsible people were always on guard against risk (always watching, always aware), and their careful vigil, like that of an ever-wakeful night watchperson, would ensure that no harm would come. If it did, then it must be the victim’s own fault. To keep with the disease and hygiene examples, as early as 1910, if a child died, a woman might be blamed for neglecting to keep a house clean enough to keep a child safe from disease. Today, your downfall might be your choice of food for your baby or the way your baby sleeps. If something happens to your baby, whether catastrophic death or something less serious, like a milestone delay or a tendency toward tantrums, a complex cocktail of historical demands on women, neoliberalism, and Western risk aversion points the accusing finger straight at the mother. She should have tried harder, remained more vigilant, cared more, and enjoyed parenting more.

Technological developments, too, played a major role in awareness of fetal risks. As Lara Freidenfelds notes in her book on the history of miscarriage and pregnancy in the United States, developments in “obstetric care and educational materials advocating self-care during pregnancy made women newly self-conscious about their pregnancies and encouraged them to feel responsible for their pregnancy outcomes.” It seems to have been the mere awareness of risk that led to an increased hypervigilance about risk factors, followed by a belief that such risk factors could be perfectly controlled. The mere possibility of being aware of risks to the fetus, aided by technological advancement, “further fed the expectation that careful planning and loving care ought to produce perfect pregnancies, an expectation belied by the miscarriages that were often confirmed in heartbreaking ways by these same technologies.”

While the demands on maternal health-care decisions for the neonate demonstrate this quite well, we have observed that the transitional state of pregnancy itself elucidates, perhaps better than anything, the aversive reactions to the “unknown” in our culture, in which science is used more to undergird a fear response than to encourage women to “proceed with caution.” Freidenfelds notes that by “the 1980s, concern about prenatal exposures and uterine environment soared. Pregnant women came to be seen increasingly in terms of the threat they posed to their expected children’s well-being.”

In fact, we would argue that the body, once pregnant, suddenly becomes a stand-in for risk itself. We suggest this is because the pregnant body is the ultimate unknown: (1) it is corporeally veiled — showing and yet hiding the fetal body within; (2) it is constantly changing by the second, and, therefore, its risk status is always ineffable, always just beyond the graspable present; and, finally, (3) in a culture obsessed with evidence-based medicine, we have very little “good” data about fetal risks.

“Good” Data and Microwaved Bologna

We say very little “good” data because double-blind studies with control groups are impossible, ethically, to conduct on pregnant women. This sort of randomized study is the gold standard for being able to — as closely as we really ever can — make claims of causation.

A simple example will make this clear. Say we want to know whether a given food promotes positive mood during the premenstrual period. Researchers will then conduct a study in which a randomly selected sample of women is randomly assigned to one of two groups: “food in question” (the one researchers are concerned about) and some “control” food (one that is known not to have any relationship with mood). A research assistant or intern, rather than the researchers themselves, assigns the women to these groups; this is known as blinding the study so that the researchers are not biased in their observations of the women’s resultant moods. The study can also be double-blind, meaning the research participants also do not know which group they are assigned to; for example, all the women receive identical-looking supplements. The researchers need to protect against the participants mistakenly believing they have an improved mood because of suggestion or a placebo effect. Additionally, in assessing the participants for signs of improved mood, the researchers need to be free from unconscious bias. For both reasons, double-blind setups are used.

For the purposes of studying fetal risk during pregnancy, the largest issue is less about blinding and more about control groups. It is simply unethical — a researcher would never dare to propose such a thing — to randomly assign a group of pregnant women to eat lead paint daily and another group of pregnant women not to do so and then observe the results. While the impact of ingesting lead paint during pregnancy may seem obvious, this sort of randomized, control-based study would in fact be the only way to claim true causal relationships between lead-paint consumption and fetal risk. An issue like lead paint ingestion, which is known to be harmful to all creatures, may seem like a relatively easy one to develop recommendations about (i.e., probably best avoided entirely, and certainly to be avoided during pregnancy), but pregnancy risk assessments become much murkier in regard to substances that are not necessarily harmful to nonpregnant people. For this reason, most of the known world exists in an odd, “somewhat risky” limbo in regard to pregnancy.

With a few exceptions, all the data we have is correlational, and this can take us only so far, because correlational data is much more subject to what are called confounders. Confounders are variables that could inadvertently be responsible for the observed dynamic. Emily Oster, in her book “Expecting Better,” provides a good example of what this could look like regarding coffee. Currently, pregnant women are advised to limit caffeine intake because of correlational data showing a relationship between caffeine consumption and miscarriage. Oster points out that morning sickness could be a potential confounder here. Greater intensity of nausea in early pregnancy, for instance, is associated with lower miscarriage rates. It seems that more intense nausea may indicate higher levels of pregnancy hormones and, therefore, a lower chance of pregnancy loss. Perhaps, Oster suggests, women who are less nauseated are simply more inclined to find coffee palatable (whereas a nauseated woman might develop an aversion to coffee). In this scenario, coffee itself would not be responsible for causing pregnancy loss but, instead, would be linked to extant chemistry in the woman’s body that already indicates a pregnancy is not going well. This, of course, is speculative, and it is but one example, but it demonstrates the case well. Virtually all pregnancy risks exist because of correlational data and, therefore, are subject to sets of confounding variables that could change the meaning of the data entirely.

Pregnancy decisions are complex decisions, and we live in an increasingly fast-paced society. Little wonder, then, that most women opt to err on the side of caution and adhere to the culture of restriction they find themselves in while pregnant. I, myself, with a background in quantitative research design and now a professor whose research focuses on the social construction of fact and risk, remember scowling each day when I microwaved my deli meat. I was frustrated that I was succumbing to acting on what I knew was a minute risk of listeria in the meat, but I knew I’d never forgive myself if something happened to my baby because I was too self-centered to microwave my bologna (which, to be clear, is disgusting). I felt overly equipped with my unique educational background — pairing critical analysis of study design and risk aversion in modern society — to think for myself about these issues. And yet here I was, microwaving meat anyway, because I had 15 minutes to get to work, I had to eat something, and I was tired from another uncomfortable night’s sleep while very pregnant. It was easiest to microwave the damn bologna.

Many mothers, regardless of background, waddle tiredly through this pathway as well, finding it easier to err on the side of safety than to resist, when resistance is loaded with such high stakes, both physical and existential. How important is that cup of coffee anyway? As we’ve said, by the 1980s, the list of possible risks during pregnancy seemed to grow, less out of clear scientific causal evidence and more out of a risk-averse society’s belief that, precisely because scientific evidence was unclear, it was better to be safe than sorry about everything.

The Roots of Optimal Motherhood: The Cult of True Womanhood

Medical and technological developments alone didn’t get us here. What exactly created our belief that there is an Optimal Mother at all? How have we come to believe that there is a perfect answer to any parenting question to begin with? And when did these standards of perfection become attached to motherhood specifically?

The roots of this belief can be linked to the 19th-century concept of the Cult of True Womanhood. By the 19th century, housewives were seen as the moral force driving the entire household. It was thought that a woman’s purity and dignity permeated her entire house. Before this period, childbearing was seen as an inevitable part of a married woman’s life, something she had to endure as a descendant of a sinful Eve; by the Victorian era, however, a mother’s child-rearing was seen as much more important than her childbearing, Freidenfelds writes. This new emphasis on childrearing meant that maternal perfection was not only valued but demanded.

Previously, women simply bore children. But now they were expected to have an impact on their upbringing in fundamental ways. For Victorians, maternal perfection was achieved through passivity: Women were not to work, even in the domestic sphere, but rather to simply be, and in so doing, spread their purity to their families, strengthening and stabilizing them as strong family units. The standard wisdom of the day held that women had the power (and therefore the obligation) to stabilize the nation itself by creating strong, morally upright families.

In the Victorian era, this concept applied to all aspects of women’s lives as daughters, wives, and mothers (and for middle- and upper-class women, these were indeed most aspects of their lives, as such women were not employable in traditional jobs). The Cult of True Womanhood is still very much with us today, although it has adapted to the norms of our society. Namely, we would argue that as women became more liberated over time, more able to leave the confines of the home, the demands for female perfection narrowed to focus only on motherhood, but with increased intensity. For instance, passivity was the name of the game for Victorian women’s norms, whereas assertiveness and activity define Optimal Motherhood today. A Victorian mother was meant to demonstrate moral purity by example — her sheer presence could, in theory, purify her family and thereby the nation. In fact, some Victorian household guides advised women to outsource childcare so that they could better maintain an image of calmness and perfection for the short periods when they were in the presence of their children. A Victorian mother might, theoretically, have been able to simply demonstrate good and proper behavior to her children. Indeed, many Victorian motherhood guides make room for the possibility that mothers may not deeply enjoy child-rearing but may simply perform “goodness” while around their children to set behavioral examples.

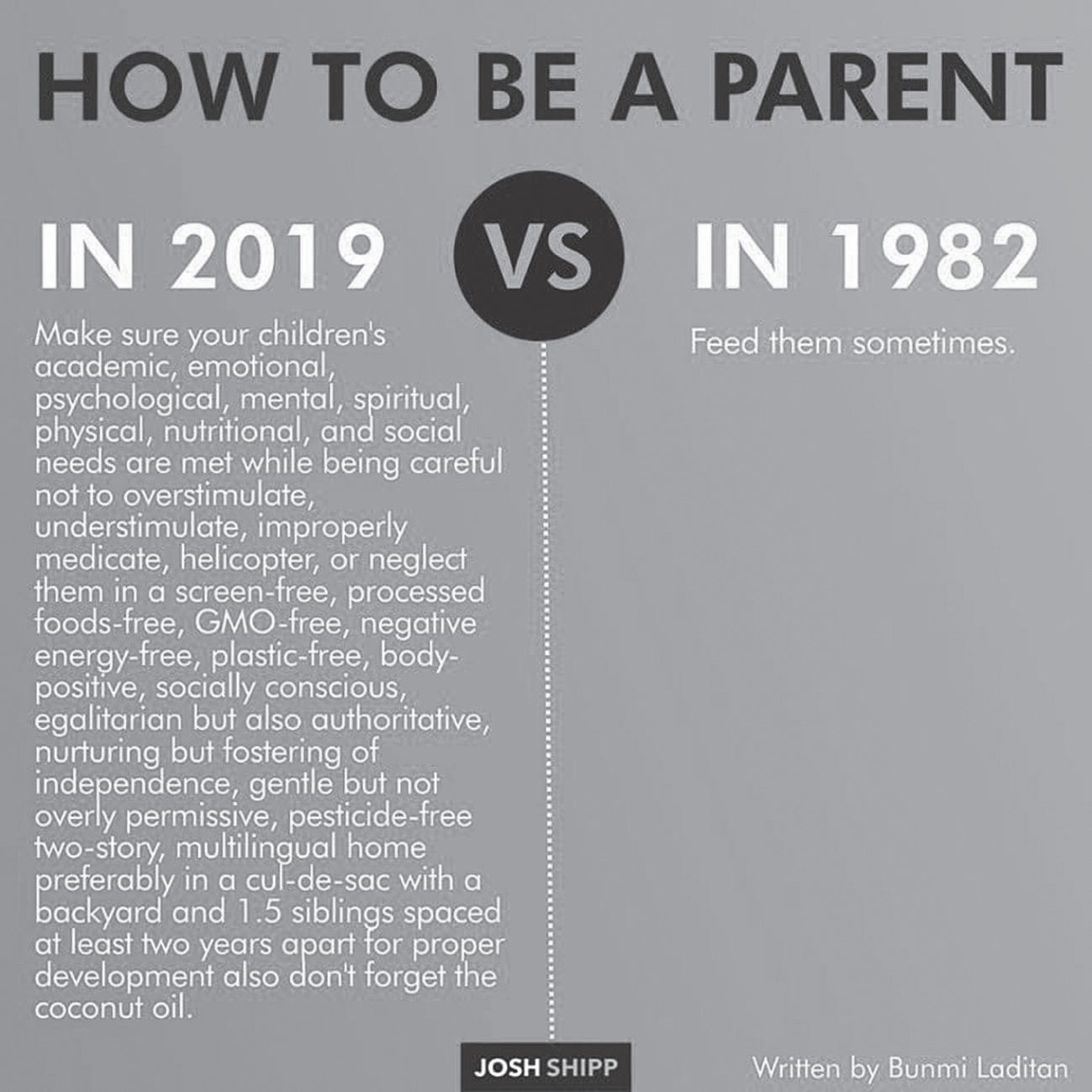

Beginning in the 1900s, women were increasingly involved in the everyday care of their children as domestic servant labor became less common. Yet, linguistic patterns demonstrated that a woman’s primary role was not purely defined by her identity as a mother. It wasn’t until the 1970s that “parent” “gained popularity as a verb,” speaking to this emphasis of “woman” as interchangeable with “mother,” yes, but also suggesting that “mother” or “parent” was not her only or primary identifier, as Jennifer Senior explains in her book on the effects of children on their parents. Consider how previous generations of mothers were apt to call themselves “housewives,” whereas now most women in similar roles use the term “stay-at-home mom.” As Senior notes, “The change in nomenclature reflects the shift in cultural emphasis: the pressures on women have gone from keeping an immaculate house to being an irreproachable mom.” Enter the Optimal Mother. Mothers now had to be not only present and proper (a Victorian notion) but excessively involved as well. And their involvement had to be perfect.

The Victorian roots of the Cult of True Womanhood, mixed with the particular flavor of 2000s-era neoliberalism, have resulted in the strange alchemical mess that we are calling Optimal Motherhood. Optimal Motherhood is the imperative expressed by the meme above, that a woman is responsible for manifesting and perfecting every aspect of her child’s being: physical, spiritual, emotional, psychological, cognitive, and behavioral. It is simultaneously, and equally important, the notion that “good mothers” will strive to do these things and do them properly, and “bad mothers” will fail or not try hard enough to do so (or, per attachment theory, not enjoy doing these things well). Reader, it is the mouse nibbling at your sleeve. It is every dilemma of how much screen time to allow, which direction to face a car seat, what clothes are currently least flammable, when to potty train, how to discipline, when to start kindergarten, and, yes, even which damn flavor of kale pouch to get.

But it is also the shadow crisis that follows each mouse nibble: Too much screen time results in poor social development, but what if my kid is the only one who enters elementary school with no digital literacy? Rear-facing is safer, but when are they too big for it? Might they suffer from not seeing our faces enough? Will a mirror make me a less safe driver? Haven’t those fire-retardant chemicals been linked to thyroid cancer? Potty training too early is supposed to be bad, but how else can I get them into the preschool I’ve handpicked and that I’m certain provides the best education for my child? Time-outs are useless, but how do I teach my child that hitting is unacceptable? Early literacy is important, but haven’t new studies shown that we start our children in curricular education too early, thereby stifling creativity?

It’s exhausting.

Kari Nixon is Assistant Professor of English at Whitworth University and a researcher at the Norwegian University of Science and Technology in Trondheim, Norway. She is the author of “Kept from All Contagion” and “Quarantine Life from Cholera to COVID-19” and co-author, with Jessica Clements, of “Optimal Motherhood and Other Lies Facebook Told Us,” from which this article is adapted.

Jessica Clements is Associate Professor of English and Composition Commons Director at Whitworth University in Spokane, Washington, and Managing Editor of Present Tense: A Journal of Rhetoric in Society.