The Remarkable Ways Our Brains Slip Into Synchrony

In the 1970s, an American candy company began an advertising campaign for peanut butter cups. In the television commercial, one person is walking down a busy city sidewalk while eating a chocolate bar, and another person is walking toward them while eating peanut butter from a jar. When they bump into each other, their foods collide. “You got your peanut butter on my chocolate!” one of them protests, while the other complains, “You got your chocolate in my peanut butter!” It is as if one of those things were right and the other wrong.

As it turns out, they both find the flavor combination pretty tasty. Something very similar to that candy collision happens when your own cycle of action-and-perception collides with someone else’s. When some object in the environment becomes part of your cognition and is also part of someone else’s, the two cognitions merge.

For instance, you and a friend might be pointing at a map, and talking about it together. Or you might be watching a television show together and sharing observations about it. Or maybe you can recall the first name of a celebrity and your spouse remembers the last name for you. Does that object (or event) out there in the environment belong to your cognition or to the other person’s?

This fluid back-and-forth sharing of information between you and other people is often so dense that it can be difficult to safely treat you and them as separate systems. If a tool that enhances your capabilities can become an extension of “who you are,” what about another person who enhances your capabilities? The information that makes you who you are includes not just the information carried inside your brain, body, and nearby objects but also the information carried by the people around you.

In fact, many of our most influential experiences — team projects, community events, holiday meals — are shared with, and partly shaped by, other people. Whether you’re having a conversation with someone, attending a lecture, coordinating on a project at work, watching TV, or just reading a book, it usually involves information that is being shared among two or more people. There’s an old Spanish proverb that goes, “Dime con quien andas y te diré quien eres”: Tell me with whom you walk, and I’ll tell you who you are.

A growing body of cognitive science research is showing that when two people cooperate on a shared task, their individual actions get coordinated in a way that is remarkably similar to how one person’s limbs get coordinated when he or she performs a solitary task. Take, for example, the solitary task of waggling your two index fingers up and down in opposite sequence. When your right index finger is lifted up, your left index finger is pointing down, and then they trade places, again and again. Try it out. It’s easy. Instead of perfect synchrony, where the fingers would be doing the same thing at the same time, this is a form of syncopation, where the fingers are doing opposite things at the same time.

Dynamical systems researcher Scott Kelso discovered that people can do this relatively quickly and stay in antiphase (each finger moving opposite to the other) for a while. However, when they speed up to a really fast finger-waggling pace, they tend to accidentally fall into an in-phase pattern: The fingers unintentionally slip into a pattern where they are both moving up at the same time and then both moving down at the same time. Kelso showed that this accidental tendency toward coordinated finger movements, even when you’re trying to produce anti-coordinated finger movements, fits into a mathematical model that describes how two different subsystems can often behave like one system.
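
One widely cited model of this kind is the Haken-Kelso-Bunz (HKB) equation, which tracks the relative phase φ between the two fingers: dφ/dt = −a sin φ − 2b sin 2φ. Here φ = 0 is in-phase, φ = π is antiphase, and the ratio b/a shrinks as the movement speeds up. Assuming that is the model meant here, the Python sketch below illustrates the slip. The function names and parameter values are illustrative choices, not taken from Kelso's work.

```python
import math

def hkb_rate(phi, a, b):
    """Rate of change of the relative phase phi in the HKB equation."""
    return -a * math.sin(phi) - 2.0 * b * math.sin(2.0 * phi)

def settle(phi0, a, b, dt=0.01, steps=20000):
    """Euler-integrate the relative phase from phi0 and return its final value."""
    phi = phi0
    for _ in range(steps):
        phi += dt * hkb_rate(phi, a, b)
    return phi

# Start just off perfect antiphase (phi = pi), as if the fingers wobbled slightly.
start = math.pi - 0.1

# Slow waggling (assumed b/a = 1.0, above the critical 0.25): antiphase is stable.
slow = settle(start, a=1.0, b=1.0)

# Fast waggling (assumed b/a = 0.1, below 0.25): antiphase is unstable.
fast = settle(start, a=1.0, b=0.1)

print(f"slow pace settles at phi = {slow:.3f} (antiphase is pi = {math.pi:.3f})")
print(f"fast pace settles at phi = {fast:.3f} (in-phase is 0)")
```

In this sketch the same small wobble dies out at the slow pace but grows at the fast pace until the two "fingers" lock into the in-phase pattern. The switch happens once b/a drops below 1/4, the point at which antiphase stops being a stable state of the equation.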

In this case, one of those subsystems is your left hemisphere’s motor cortex trying to do one thing (raise your right finger) and the other subsystem is your right hemisphere’s motor cortex trying to do the opposite (lower your left finger) at the same time. Then they reverse that pattern, again and again. Those two brain regions have several neural connections between them, and those connections make it hard to maintain this anti-coordinated movement pattern when it ramps up to high speed. Your motions slip into synchrony, even when you’re trying to have them stay out of sync. But, you may be asking, what about transmitting information to other people?

Psychologist Richard Schmidt extended Kelso’s experiment to take place with one person’s left leg swinging from the edge of a table that they’re sitting on and another person’s right leg swinging from the same table. Just like Kelso’s two index fingers, the two separate people are supposed to swing their legs in antiphase with one another. One of them swings her leg forward (away from the table) while the other swings his leg backward (under the table). Then they reverse, again and again. And just like Kelso’s two index fingers, as these two people speed up their antiphase leg movements, they tend to slip into an in-phase pattern.

Clearly, this is not the work of the corpus callosum. That bundle of fibers connects the left and right motor cortices within a single brain; it does not connect one person’s motor cortex to another person’s. Evidently, neural connections are not the only thing that can provide the sharing of information that synchronizes two different subsystems. Your own action-perception cycle can share information back and forth with someone else’s action-perception cycle, in a manner that makes the two of you synchronize and behave a little bit like one system.

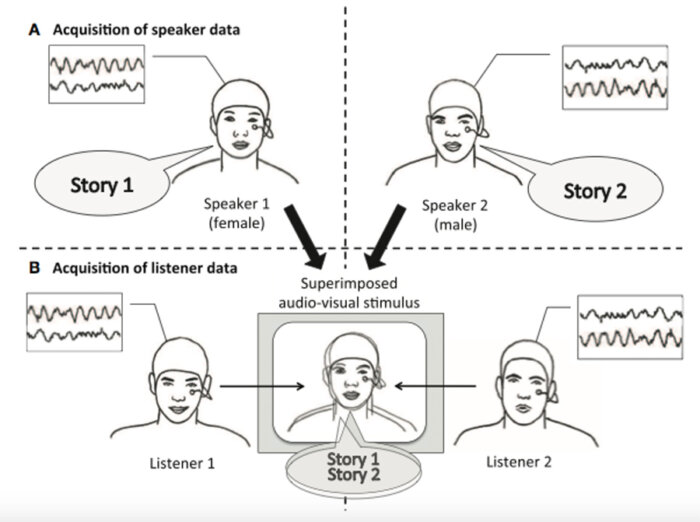

A dramatic example of this sharing of information across two brains comes from an ingenious experiment conducted by experimental psychologist Anna Kuhlen. Kuhlen recorded brain activity (with EEG) of a woman telling a story and made a video of the woman at the same time. She repeated this with a man telling a different story. Then she superimposed the two videos on each other, making a kind of ghostly image of both talking heads and an overlay of the audio tracks from both stories. When you watch and listen to this video, it can be slightly distracting, but if you decide to pay attention to one of the speakers and ignore the other, you can do it. That’s exactly what Kuhlen asked her next set of experiment participants to do, while she recorded their brain activity.

Thus, she had several minutes of brain activity from the female speaker, from the male speaker, from listeners who were told to focus on the female speaker, and from listeners who were told to focus on the male speaker. As predicted, listeners who focused on the female speaker produced brain activity that was statistically correlated with the brain activity of the female speaker (and less correlated with the brain activity of the male speaker), and vice versa for listeners who focused on the male speaker. Essentially, listening to someone tell you a story makes your brain do some of the same kinds of things that the speaker’s brain was doing.

But you don’t have to be recording brain activity to see these correlations between speaker and listener emerge. If two brains are highly correlated, then it’s a safe bet that the movements carried out by the bodies attached to those brains are also going to be correlated.

My students Daniel Richardson and Rick Dale recorded a speaker’s eye movements while she looked at a grid of six pictures of cast members from the TV show Friends and told an unscripted story about her favorite episode. Just the audio recording of that story was later played over headphones for listeners who were also looking at that grid of six pictures and having their own eye movements recorded. They couldn’t see the speaker, but they could hear her voice. Over the course of the few minutes of story, there was a remarkable correlation between the eye movements of the speaker and the eye movements of the listeners, with a lag of about 1.5 seconds.

Think of it this way: The speaker would look at a cast member’s face and a half second later say that character’s name. Then, the listener’s brain would take a half second to process hearing the name and then another half second to direct the eyes to that cast member’s face. Add up those three half seconds and there’s your 1.5-second lag. Interestingly, listeners whose eye movements had a higher correlation with those of the speaker also answered comprehension questions faster at the end of the experiment. Listeners whose eye movements were more synchronized with the speaker’s eye movements appeared to have absorbed the information from the story in a more efficient manner.
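
To make the idea of a lagged correlation concrete, here is a minimal Python sketch that slides a listener's gaze record against a speaker's and reports the delay at which the two line up best. It is only an illustration. The 10 Hz sampling rate, the toy gaze data, and the function names are all assumptions, and the researchers' actual analysis may have used a different technique.

```python
import math

SAMPLE_RATE_HZ = 10  # hypothetical: 10 gaze samples per second

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) * (a - mx) for a in x)
    vy = sum((b - my) * (b - my) for b in y)
    return cov / math.sqrt(vx * vy)

def best_lag(speaker, listener, max_lag):
    """Return (lag, r): the listener delay, in samples, with the highest correlation."""
    best = (0, -2.0)
    for lag in range(max_lag + 1):
        x = speaker[:len(speaker) - lag]  # speaker at time t
        y = listener[lag:]                # listener at time t + lag
        r = pearson(x, y)
        if r > best[1]:
            best = (lag, r)
    return best

# Toy data (made up): 1 = looking at a given picture, 0 = looking elsewhere.
dwell_lengths = [8, 13, 5, 21, 9, 17, 6, 11, 14, 7, 19, 10] * 4
speaker = []
for i, length in enumerate(dwell_lengths):
    speaker.extend([i % 2] * length)

# The toy listener copies the speaker's gaze 15 samples (1.5 seconds) later.
delay = 15
listener = [0] * delay + speaker[:-delay]

lag, r = best_lag(speaker, listener, max_lag=30)
lag_seconds = lag / SAMPLE_RATE_HZ
print(f"best lag: {lag_seconds} s (r = {r:.2f})")
```

Because the toy listener is an exact delayed copy of the speaker, the correlation peaks at a lag of 1.5 seconds. Real gaze data would be far noisier, with a broader and lower peak.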

All this means that, when processing language, the listener (or reader?) will tend to exhibit some brain activity and eye movements that are similar to the brain activity and eye movements produced by the speaker (or writer?). That’s right; while you are reading this paragraph, your brain activity and eye movements are probably somewhat correlated with the brain activity and eye movements that I produced while proofreading this paragraph. No matter who you are, no matter when you read this, a time slice of you and a time slice of me are briefly “on the same page,” both literally and figuratively, both physically and metaphysically.

This physical and mental coordination is even more impressive when the two people are simultaneously present with each other and contributing equally to the conversation. With the help of Natasha Kirkham, Richardson and Dale expanded their eye-tracking experiment to work with two people in an unscripted dialogue. They put two eye-tracking machines in two separate rooms and had two people view the same painting while talking with each other over headsets.

Imagine being in this experiment. You’re alone in a room looking at a picture, but you get to talk to someone else on your headset who is in another room and looking at the same picture. It’s a bit like calling up a friend on the phone and watching a live sports game on TV together, like my buddy Steve and I do. While their eye movements were being recorded, these experiment participants talked about the painting that they were both looking at. When you analyze the transcript of the conversation, you see numerous interruptions and completions of each other’s sentences, because an important part of how we understand what a person is saying is by anticipating what’s coming next out of their mouth.

Importantly, since the two people in the conversation were anticipating each other’s words and thoughts so closely, that 1.5-second lag in the correlation between eye movements disappeared. People were looking at the same parts of the same image at the same time. Their eye movements had slipped into genuine synchrony, not unlike Kelso’s waggling fingers and Schmidt’s swinging legs.

When two brains get correlated by a shared conversation, it’s not just the brains and eyes that produce coordinated behavior. It’s the hands, the heads, and the spines, too. The two bodies get coordinated in ways in which they weren’t coordinated before the conversation began.

For example, human movement researcher Kevin Shockley had two people talk to each other about a shared puzzle while they stood on force plates that recorded their postural sway. Even when you think you’re standing still, your body’s center of mass still sways back and forth by a few millimeters. And when Shockley’s participants were talking with each other about this puzzle, their postural sways became correlated with one another. When the two participants each talked with someone else about the puzzle instead, their postural sways were no longer correlated with each other. Some of that coordination of postural sway may have resulted from hand gestures they made together or head nods they made to each other in the back and forth of the conversation.

When cognitive scientist Max Louwerse focused on the gestures and nods that people produce during a conversation about a shared puzzle, he found that specific kinds of gestures showed correlations at different lag times. For instance, coordinated head nods happen about one second apart from one another. Essentially, one person proposes something and then nods her head as a form of requesting confirmation from the other person, and then the other person nods back to give that confirmation.

Social mimicry, however, happens on a different timescale. People often mimic one another without realizing it, like crossing your legs in a meeting after someone else crossed their legs, interlacing your fingers and resting them on the back of your head after someone else did, or rubbing your chin after someone else did. Louwerse found in his experiment that when one speaker touches their face, the other speaker is also statistically likely to touch their face — not one second later like the head nods but more like 20–30 seconds later. This accidental motor coordination tends to induce more empathy and rapport between the people involved. The shared conversation not only improves the processing of shared motor output but can also improve the processing of shared perceptual input. How do two people “share perceptual input,” you ask? Good question.

Cognitive scientist Riccardo Fusaroli examined the conversation transcripts of two people performing a shared perception task with about a hundred experimental trials. On each trial, each person carried out a difficult visual discrimination task and then discussed their individual responses to reach a consensus for a joint response to the visual stimulus. Occasionally, their individual responses differed, and the joint response would require that one of them change their answer.

Imagine doing this experiment with a close friend. You see a brief flash of several visual stimuli and have to decide which stimulus was the odd one out. You make your guess by pressing a button, and then you find out that your friend made a different response. Now the two of you have to decide together which of you was right. Fusaroli found that some of the conversation transcripts didn’t show much correlation in their use of expressions of certainty (for example, one person might use phrases like “confident,” “slightly unsure,” or “completely uncertain,” while the other used phrases like “I dunno” and “maybe”).

In those pairs of people, the joint responses tended not to provide any improvement compared to the responses of the best individual responder in the pair. For example, sometimes a very accurate responder can give in too often to a less accurate responder, and their joint responses can be worse than the very accurate responder’s individual performance. However, Fusaroli found that when a pair of participants showed strong coordination between them in their expressions of certainty, using the same kinds of phrases, their joint responses outperformed those of their best individual responder.

Essentially, the pair is developing their own miniature language that allows them to calibrate each other’s level of confidence in their perception of a particular visual stimulus. This linguistic coordination made their two brains act like one system, with better performance than either brain alone. I guess two heads really are better than one — particularly when they are “on the same page” about how to talk about what they are seeing.

These correlations between two people mean that if you knew what kinds of gestures, brainwaves, eye movements, and word choices one speaker in a conversation was making, then you could make moderately good predictions about what kinds of gestures, brainwaves, eye movements, and word choices the other speaker would be making. This is because that collection of brainwaves and behaviors (emanating from two people) is becoming one system.

So when you finally get together with your friends and family again — or perhaps via Zoom during this pandemic — remember this: A good conversation is not really a collection of individuals taking turns sending complete messages to each other. They’re not sequentially adding messages to the dialogue. That’s a bad conversation. A good conversation involves people simultaneously co-creating a shared monologue — a kind of mutual synchronization of the brains.

Michael J. Spivey is Professor of Cognitive Science at the University of California, Merced, and the author of “The Continuity of Mind” and “Who You Are,” from which this article is adapted.